
Ceph production example

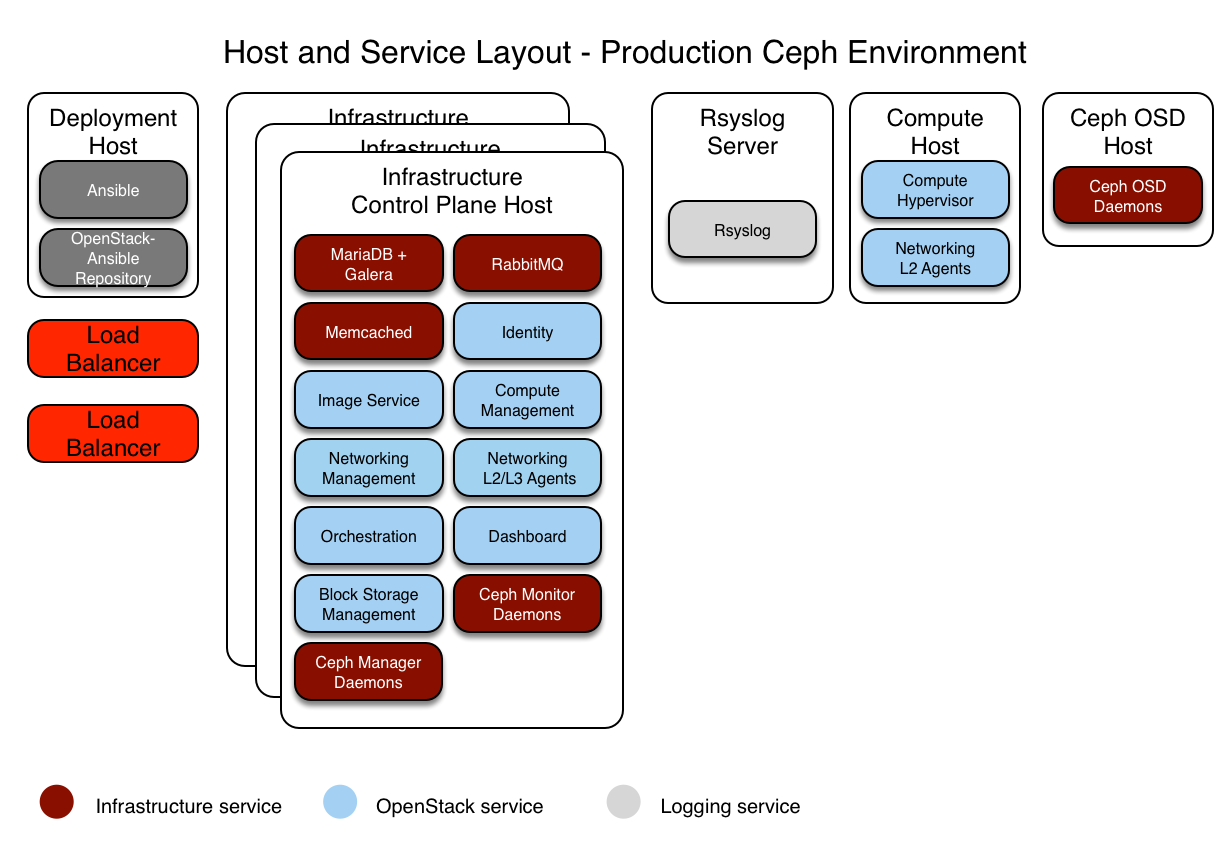

This section describes an example production environment for a working OpenStack-Ansible (OSA) deployment with high availability services that uses the Ceph backend for images, volumes, and instances.

This example environment has the following characteristics:

- Three infrastructure (control plane) hosts with ceph-mon containers
- Two compute hosts
- Three Ceph OSD storage hosts
- One log aggregation host
- Multiple Network Interface Cards (NIC) configured as bonded pairs for each host
- Full compute kit with the Telemetry service (ceilometer) included, with Ceph configured as a storage back end for the Image (glance) and Block Storage (cinder) services
- Internet access via the router address 172.29.236.1 on the Management Network

Integration with Ceph

OpenStack-Ansible allows Ceph storage cluster integration in two ways:

- connecting to your own Ceph cluster by pointing to its information in `user_variables.yml`
- deploying a Ceph cluster by using the roles maintained by the Ceph-Ansible project. Deployers can enable the `ceph-install` playbook by adding hosts to the `ceph-mon_hosts`, `ceph-osd_hosts` and `ceph-rgw_hosts` groups in `openstack_user_config.yml`, and then configuring Ceph-Ansible specific vars in the OpenStack-Ansible `user_variables.yml` file.

This example focuses on deploying both OpenStack-Ansible and its Ceph cluster.

Network configuration

Network CIDR/VLAN assignments

The following CIDR and VLAN assignments are used for this environment.

| Network | CIDR | VLAN |
|---|---|---|
| Management Network | 172.29.236.0/22 | 10 |
| Tunnel (VXLAN) Network | 172.29.240.0/22 | 30 |
| Storage Network | 172.29.244.0/22 | 20 |
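You can sanity-check the addressing plan with a short Python sketch that uses only the standard `ipaddress` module. It confirms that the three /22 networks do not overlap and that no VLAN ID is reused, then reports how many usable addresses each network provides. The `NETWORKS` dict and `check_plan` helper are hypothetical names for illustration and are not part of OpenStack-Ansible.

```python
import ipaddress

# CIDR and VLAN ID per network, transcribed from the table above.
NETWORKS = {
    "management": ("172.29.236.0/22", 10),
    "tunnel": ("172.29.240.0/22", 30),
    "storage": ("172.29.244.0/22", 20),
}


def check_plan(networks):
    """Raise ValueError on overlapping CIDRs or reused VLAN IDs.

    Returns the number of usable host addresses per network
    (total addresses minus the network and broadcast addresses).
    """
    parsed = {name: ipaddress.ip_network(cidr) for name, (cidr, _) in networks.items()}
    vlans = [vlan for _, vlan in networks.values()]
    if len(set(vlans)) != len(vlans):
        raise ValueError("a VLAN ID is used by more than one network")
    names = list(parsed)
    for i, first in enumerate(names):
        for second in names[i + 1:]:
            if parsed[first].overlaps(parsed[second]):
                raise ValueError(f"{first} overlaps {second}")
    return {name: net.num_addresses - 2 for name, net in parsed.items()}


if __name__ == "__main__":
    for name, usable in check_plan(NETWORKS).items():
        print(f"{name}: {usable} usable addresses")
```

Each /22 contains 1024 addresses, so every network here leaves 1022 usable host addresses.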

IP assignments

The following host names and IP addresses are used for this environment.

| Host name | Management IP | Tunnel (VXLAN) IP | Storage IP |
|---|---|---|---|
| lb_vip_address | 172.29.236.9 | | |
| infra1 | 172.29.236.11 | 172.29.240.11 | |
| infra2 | 172.29.236.12 | 172.29.240.12 | |
| infra3 | 172.29.236.13 | 172.29.240.13 | |
| log1 | 172.29.236.14 | | |
| compute1 | 172.29.236.16 | 172.29.240.16 | 172.29.244.16 |
| compute2 | 172.29.236.17 | 172.29.240.17 | 172.29.244.17 |
| osd1 | 172.29.236.18 | | 172.29.244.18 |
| osd2 | 172.29.236.19 | | 172.29.244.19 |
| osd3 | 172.29.236.20 | | 172.29.244.20 |
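You can cross-check the IP assignment table against the CIDR table with a short Python sketch. Every address must fall inside the network for its column, and no address may be assigned twice. `HOSTS` transcribes the table, with None marking an empty cell. `validate` is a hypothetical helper, not an OpenStack-Ansible tool.

```python
import ipaddress

# Networks from the CIDR/VLAN assignment table, in column order.
COLUMNS = (
    ipaddress.ip_network("172.29.236.0/22"),  # Management
    ipaddress.ip_network("172.29.240.0/22"),  # Tunnel (VXLAN)
    ipaddress.ip_network("172.29.244.0/22"),  # Storage
)

# (management, tunnel, storage) per host; None marks an empty cell.
HOSTS = {
    "lb_vip_address": ("172.29.236.9", None, None),
    "infra1": ("172.29.236.11", "172.29.240.11", None),
    "infra2": ("172.29.236.12", "172.29.240.12", None),
    "infra3": ("172.29.236.13", "172.29.240.13", None),
    "log1": ("172.29.236.14", None, None),
    "compute1": ("172.29.236.16", "172.29.240.16", "172.29.244.16"),
    "compute2": ("172.29.236.17", "172.29.240.17", "172.29.244.17"),
    "osd1": ("172.29.236.18", None, "172.29.244.18"),
    "osd2": ("172.29.236.19", None, "172.29.244.19"),
    "osd3": ("172.29.236.20", None, "172.29.244.20"),
}


def validate(hosts):
    """Return a list of problems; an empty list means the table is consistent."""
    seen = {}
    problems = []
    for host, addrs in hosts.items():
        for addr, net in zip(addrs, COLUMNS):
            if addr is None:
                continue
            ip = ipaddress.ip_address(addr)
            if ip not in net:
                problems.append(f"{host}: {ip} not in {net}")
            if ip in seen:
                problems.append(f"{host}: {ip} already used by {seen[ip]}")
            seen[ip] = host
    return problems


if __name__ == "__main__":
    print(validate(HOSTS) or "IP assignments are consistent")
```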

Host network configuration

Each host needs the correct network bridges. The following is the /etc/network/interfaces file for infra1.

Note

If your environment does not have eth0 but instead has p1p1 or another interface name, make sure every reference to eth0 in all configuration files is replaced with the appropriate name. The same applies to additional network interfaces.
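The renaming described in the note above can be scripted. The minimal Python sketch below rewrites whole-word interface names in the text of a host's /etc/network/interfaces file. The `rename_interfaces` helper and the example mapping are hypothetical. The sketch only handles the interfaces file text, so check other configuration files by hand and review the output before using it.

```python
import re


def rename_interfaces(text, mapping):
    """Replace whole-word interface names in interfaces(5) text.

    All names are substituted in one pass, so a mapping such as
    {"eth0": "eth1", "eth1": "eth0"} swaps the names instead of chaining.
    """
    if not mapping:
        return text
    # Longest names first keeps the alternation unambiguous; \b stops
    # "eth1" from matching inside "eth12".
    names = sorted(mapping, key=len, reverse=True)
    pattern = re.compile(r"\b(" + "|".join(re.escape(n) for n in names) + r")\b")
    return pattern.sub(lambda m: mapping[m.group(1)], text)


if __name__ == "__main__":
    # Hypothetical mapping from the example names to names on your hardware.
    sample = "auto eth0\niface eth0 inet manual\n    bond-master bond0\n"
    print(rename_interfaces(sample, {"eth0": "p1p1"}))
```

To apply this to a real host, read /etc/network/interfaces, pass its contents through the function, and write the result to a separate file for review before replacing the original.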

```
# This is a multi-NIC bonded configuration to implement the required bridges
# for OpenStack-Ansible. This illustrates the configuration of the first
# Infrastructure host and the IP addresses assigned should be adapted
# for implementation on the other hosts.
#
# After implementing this configuration, the host will need to be
# rebooted.

# Assuming that eth0/1 and eth2/3 are dual port NICs we pair
# eth0 with eth2 and eth1 with eth3 for increased resiliency
# in the case of one interface card failing.
auto eth0
iface eth0 inet manual
    bond-master bond0
    bond-primary eth0

auto eth1
iface eth1 inet manual
    bond-master bond1
    bond-primary eth1

auto eth2
iface eth2 inet manual
    bond-master bond0

auto eth3
iface eth3 inet manual
    bond-master bond1

# Create a bonded interface. Note that the "bond-slaves" is set to none. This
# is because the bond-master has already been set in the raw interfaces for
# the new bond0.
auto bond0
iface bond0 inet manual
    bond-slaves none
    bond-mode active-backup
    bond-miimon 100
    bond-downdelay 200
    bond-updelay 200

# This bond will carry VLAN and VXLAN traffic to ensure isolation from
# control plane traffic on bond0.
auto bond1
iface bond1 inet manual
    bond-slaves none
    bond-mode active-backup
    bond-miimon 100
    bond-downdelay 250
    bond-updelay 250

# Container/Host management VLAN interface
auto bond0.10
iface bond0.10 inet manual
    vlan-raw-device bond0

# OpenStack Networking VXLAN (tunnel/overlay) VLAN interface
auto bond1.30
iface bond1.30 inet manual
    vlan-raw-device bond1

# Storage network VLAN interface (optional)
auto bond0.20
iface bond0.20 inet manual
    vlan-raw-device bond0

# Container/Host management bridge
auto br-mgmt
iface br-mgmt inet static
    bridge_stp off
    bridge_waitport 0
    bridge_fd 0
    bridge_ports bond0.10
    address 172.29.236.11
    netmask 255.255.252.0
    gateway 172.29.236.1
    dns-nameservers 8.8.8.8 8.8.4.4

# OpenStack Networking VXLAN (tunnel/overlay) bridge
#
# The COMPUTE, NETWORK and INFRA nodes must have an IP address
# on this bridge.
#
auto br-vxlan
iface br-vxlan inet static
    bridge_stp off
    bridge_waitport 0
    bridge_fd 0
    bridge_ports bond1.30
    # infra1 tunnel IP, per the IP assignments table
    address 172.29.240.11
    netmask 255.255.252.0

# OpenStack Networking VLAN bridge
auto br-vlan
iface br-vlan inet manual
    bridge_stp off
    bridge_waitport 0
    bridge_fd 0
    bridge_ports bond1

# compute1 Network VLAN bridge
#auto br-vlan
#iface br-vlan inet manual
#    bridge_stp off
#    bridge_waitport 0
#    bridge_fd 0
#
# For tenant vlan support, create a veth pair to be used when the neutron
# agent is not containerized on the compute hosts. 'eth12' is the value used on
# the host_bind_override parameter of the br-vlan network section of the
# openstack_user_config example file. The veth peer name must match the value
# specified on the host_bind_override parameter.
#
# When the neutron agent is containerized it will use the container_interface
# value of the br-vlan network, which is also the same 'eth12' value.
#
# Create veth pair, do not abort if already exists
#    pre-up ip link add br-vlan-veth type veth peer name eth12 || true
# Set both ends UP
#    pre-up ip link set br-vlan-veth up
#    pre-up ip link set eth12 up
# Delete veth pair on DOWN
#    post-down ip link del br-vlan-veth || true
#    bridge_ports bond1 br-vlan-veth

# Storage bridge (optional)
#
# Only the COMPUTE and STORAGE nodes must have an IP address
# on this bridge. When used by infrastructure nodes, the
# IP addresses are assigned to containers which use this
# bridge.
#
auto br-storage
iface br-storage inet manual
    bridge_stp off
    bridge_waitport 0
    bridge_fd 0
    bridge_ports bond0.20

# compute1 Storage bridge
#auto br-storage
#iface br-storage inet static
#    bridge_stp off
#    bridge_waitport 0
#    bridge_fd 0
#    bridge_ports bond0.20
#    address 172.29.244.16
#    netmask 255.255.252.0
```
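To double-check a static stanza such as br-mgmt, a short Python sketch with the standard `ipaddress` module can derive the network from the `address` and `netmask` lines and confirm that the `gateway` is on-link. `check_static_iface` is a hypothetical helper, not an OpenStack-Ansible tool.

```python
import ipaddress


def check_static_iface(address, netmask, gateway=None):
    """Return the network of a static stanza; raise if the gateway is off-link."""
    # ip_interface accepts "address/netmask" as well as "address/prefixlen".
    iface = ipaddress.ip_interface(f"{address}/{netmask}")
    if gateway is not None and ipaddress.ip_address(gateway) not in iface.network:
        raise ValueError(f"gateway {gateway} is not in {iface.network}")
    return iface.network


if __name__ == "__main__":
    # Values from the br-mgmt stanza for infra1.
    print(check_static_iface("172.29.236.11", "255.255.252.0", "172.29.236.1"))
```

For the br-mgmt values this returns 172.29.236.0/22, the Management Network from the CIDR table. The netmask 255.255.252.0 is the dotted form of a /22 prefix.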

Deployment configuration

Environment layout

The /etc/openstack_deploy/openstack_user_config.yml file defines the environment layout.

The following configuration describes the layout for this environment.

```yaml
---
cidr_networks: &cidr_networks
  container: 172.29.236.0/22
  tunnel: 172.29.240.0/22
  storage: 172.29.244.0/22

used_ips:
  - "172.29.236.1,172.29.236.50"
  - "172.29.240.1,172.29.240.50"
  - "172.29.244.1,172.29.244.50"
  - "172.29.248.1,172.29.248.50"

global_overrides:
  cidr_networks: *cidr_networks
  internal_lb_vip_address: 172.29.236.9
  #
  # The below domain name must resolve to an IP address
  # in the CIDR specified in haproxy_keepalived_external_vip_cidr.
  # If using different protocols (https/http) for the public/internal
  # endpoints the two addresses must be different.
  #
  external_lb_vip_address: openstack.example.com
  management_bridge: "br-mgmt"
  provider_networks:
    - network:
        container_bridge: "br-mgmt"
        container_type: "veth"
        container_interface: "eth1"
        ip_from_q: "container"
        type: "raw"
        group_binds:
          - all_containers
          - hosts
        is_container_address: true
    - network:
        container_bridge: "br-vxlan"
        container_type: "veth"
        container_interface: "eth10"
        ip_from_q: "tunnel"
        type: "vxlan"
        range: "1:1000"
        net_name: "vxlan"
        group_binds:
          - neutron_linuxbridge_agent
    - network:
        container_bridge: "br-vlan"
        container_type: "veth"
        container_interface: "eth12"
        host_bind_override: "eth12"
        type: "flat"
        net_name: "flat"
        group_binds:
          - neutron_linuxbridge_agent
    - network:
        container_bridge: "br-vlan"
        container_type: "veth"
        container_interface: "eth11"
        type: "vlan"
        range: "101:200,301:400"
        net_name: "vlan"
        group_binds:
          - neutron_linuxbridge_agent
    - network:
        container_bridge: "br-storage"
        container_type: "veth"
        container_interface: "eth2"
        ip_from_q: "storage"
        type: "raw"
        group_binds:
          - glance_api
          - cinder_api
          - cinder_volume
          - manila_share
          - nova_compute
          - ceph-osd

###
### Infrastructure
###

_infrastructure_hosts: &infrastructure_hosts
  infra1:
    ip: 172.29.236.11
  infra2:
    ip: 172.29.236.12
  infra3:
    ip: 172.29.236.13

# nova hypervisors
compute_hosts: &compute_hosts
  compute1:
    ip: 172.29.236.16
  compute2:
    ip: 172.29.236.17

ceph-osd_hosts:
  osd1:
    ip: 172.29.236.18
  osd2:
    ip: 172.29.236.19
  osd3:
    ip: 172.29.236.20

# galera, memcache, rabbitmq, utility
shared-infra_hosts: *infrastructure_hosts

# ceph-mon containers
ceph-mon_hosts: *infrastructure_hosts

# ceph-mds containers
ceph-mds_hosts: *infrastructure_hosts

# ganesha-nfs hosts
ceph-nfs_hosts: *infrastructure_hosts

# repository (apt cache, python packages, etc)
repo-infra_hosts: *infrastructure_hosts

# load balancer
# Ideally the load balancer should not use the Infrastructure hosts.
# Dedicated hardware is best for improved performance and security.
haproxy_hosts: *infrastructure_hosts

# rsyslog server
log_hosts:
  log1:
    ip: 172.29.236.14

###
### OpenStack
###

# keystone
identity_hosts: *infrastructure_hosts

# cinder api services
storage-infra_hosts: *infrastructure_hosts

# cinder volume hosts (Ceph RBD-backed)
storage_hosts: *infrastructure_hosts

# glance
image_hosts: *infrastructure_hosts

# placement
placement-infra_hosts: *infrastructure_hosts

# nova api, conductor, etc services
compute-infra_hosts: *infrastructure_hosts

# heat
orchestration_hosts: *infrastructure_hosts

# horizon
dashboard_hosts: *infrastructure_hosts

# neutron server, agents (L3, etc)
network_hosts: *infrastructure_hosts

# ceilometer (telemetry data collection)
metering-infra_hosts: *infrastructure_hosts

# aodh (telemetry alarm service)
metering-alarm_hosts: *infrastructure_hosts

# gnocchi (telemetry metrics storage)
metrics_hosts: *infrastructure_hosts

# manila (share service)
manila-infra_hosts: *infrastructure_hosts
manila-data_hosts: *infrastructure_hosts

# ceilometer compute agent (telemetry data collection)
metering-compute_hosts: *compute_hosts
```
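The `used_ips` ranges reserve addresses so the inventory does not assign them to containers. Statically assigned addresses (the gateway, the load balancer VIP, and the host IPs) should therefore fall inside those ranges. The Python sketch below checks this with the standard `ipaddress` module. `parse_ranges`, `unreserved`, and `STATIC` are hypothetical names for illustration. Treating an entry without a comma as a single address is this sketch's own handling, not a statement about the OpenStack-Ansible parser.

```python
import ipaddress

# used_ips entries from openstack_user_config.yml ("first,last" ranges).
USED_IPS = [
    "172.29.236.1,172.29.236.50",
    "172.29.240.1,172.29.240.50",
    "172.29.244.1,172.29.244.50",
    "172.29.248.1,172.29.248.50",
]


def parse_ranges(entries):
    """Turn "first,last" strings into (start, end) address pairs.

    An entry without a comma is treated as a single address.
    """
    ranges = []
    for entry in entries:
        first, _, last = entry.partition(",")
        start = ipaddress.ip_address(first.strip())
        end = ipaddress.ip_address((last or first).strip())
        ranges.append((start, end))
    return ranges


def unreserved(addresses, entries):
    """Return the addresses that no used_ips range covers."""
    ranges = parse_ranges(entries)
    return [
        addr
        for addr in addresses
        if not any(start <= ipaddress.ip_address(addr) <= end for start, end in ranges)
    ]


# Gateway, VIP and management IPs of the hosts in this example.
STATIC = ["172.29.236.1", "172.29.236.9"] + [
    f"172.29.236.{n}" for n in (11, 12, 13, 14, 16, 17, 18, 19, 20)
]

if __name__ == "__main__":
    print(unreserved(STATIC, USED_IPS) or "all static addresses are reserved")
```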

Environment customizations

Optional files in /etc/openstack_deploy/env.d allow the customization of Ansible groups. This lets the deployer set whether each service runs in a container (the default) or on the host (on metal).

For a Ceph environment, you can run cinder-volume in a container. To do this, create a /etc/openstack_deploy/env.d/cinder.yml file with the following content:

```yaml
---
# This file contains an example to show how to set
# the cinder-volume service to run in a container.
#
# Important note:
# When using LVM or any iSCSI-based cinder backends, such as NetApp with
# iSCSI protocol, the cinder-volume service *must* run on metal.
# Reference: https://bugs.launchpad.net/ubuntu/+source/lxc/+bug/1226855

container_skel:
  cinder_volumes_container:
    properties:
      is_metal: false
```

User variables

The /etc/openstack_deploy/user_variables.yml file defines the global overrides for the default variables.

For this example environment, we configure a highly available load balancer. We implement the load balancer (HAProxy) with an HA layer (keepalived) on the infrastructure hosts. Your /etc/openstack_deploy/user_variables.yml must have the following content to configure HAProxy, keepalived, and Ceph:

```yaml
---
# Because we have three haproxy nodes, we need
# one active LB IP, and we use keepalived for that.

## Load Balancer Configuration (haproxy/keepalived)
haproxy_keepalived_external_vip_cidr: "<external_ip_address>/<netmask>"
# The VIP itself (internal_lb_vip_address) with its prefix length
haproxy_keepalived_internal_vip_cidr: "172.29.236.9/22"
haproxy_keepalived_external_interface: ens2
haproxy_keepalived_internal_interface: br-mgmt

## Ceph cluster fsid (must be generated before first run)
## Generate a uuid using: python -c 'import uuid; print(str(uuid.uuid4()))'
generate_fsid: false
fsid: 116f14c4-7fe1-40e4-94eb-9240b63de5c1 # Replace with your generated UUID

## ceph-ansible settings
## See https://github.com/ceph/ceph-ansible/tree/master/group_vars for
## additional configuration options available.
monitor_address_block: "{{ cidr_networks.container }}"
public_network: "{{ cidr_networks.container }}"
cluster_network: "{{ cidr_networks.storage }}"
journal_size: 10240 # size in MB
# ceph-ansible automatically creates pools & keys for OpenStack services
openstack_config: true
cinder_ceph_client: cinder
glance_ceph_client: glance
glance_default_store: rbd
glance_rbd_store_pool: images
nova_libvirt_images_rbd_pool: vms

cinder_backends:
  RBD:
    volume_driver: cinder.volume.drivers.rbd.RBDDriver
    rbd_pool: volumes
    rbd_ceph_conf: /etc/ceph/ceph.conf
    rbd_store_chunk_size: 8
    volume_backend_name: rbddriver
    rbd_user: "{{ cinder_ceph_client }}"
    rbd_secret_uuid: "{{ cinder_ceph_client_uuid }}"
    report_discard_supported: true
```
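The fsid comment in this file suggests generating a UUID with a Python one-liner before the first run. The slightly longer sketch below wraps that one-liner and adds a check, so a hand-edited `fsid` value can be validated before deployment. `new_fsid` and `is_valid_fsid` are hypothetical helpers. Requiring the canonical lowercase, hyphenated form (the form `uuid4()` prints) is this sketch's own conservative choice.

```python
import uuid


def new_fsid():
    """Generate a random (version 4) UUID string for the Ceph fsid."""
    return str(uuid.uuid4())


def is_valid_fsid(value):
    """Return True only for a canonical, hyphenated UUID string."""
    try:
        # uuid.UUID also accepts braces, "urn:uuid:" and unhyphenated hex,
        # so compare against the canonical string form to reject those.
        return str(uuid.UUID(value)) == value.lower()
    except (ValueError, AttributeError, TypeError):
        return False


if __name__ == "__main__":
    print(new_fsid())
```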