
Ceph Produktionsbeispiel¶

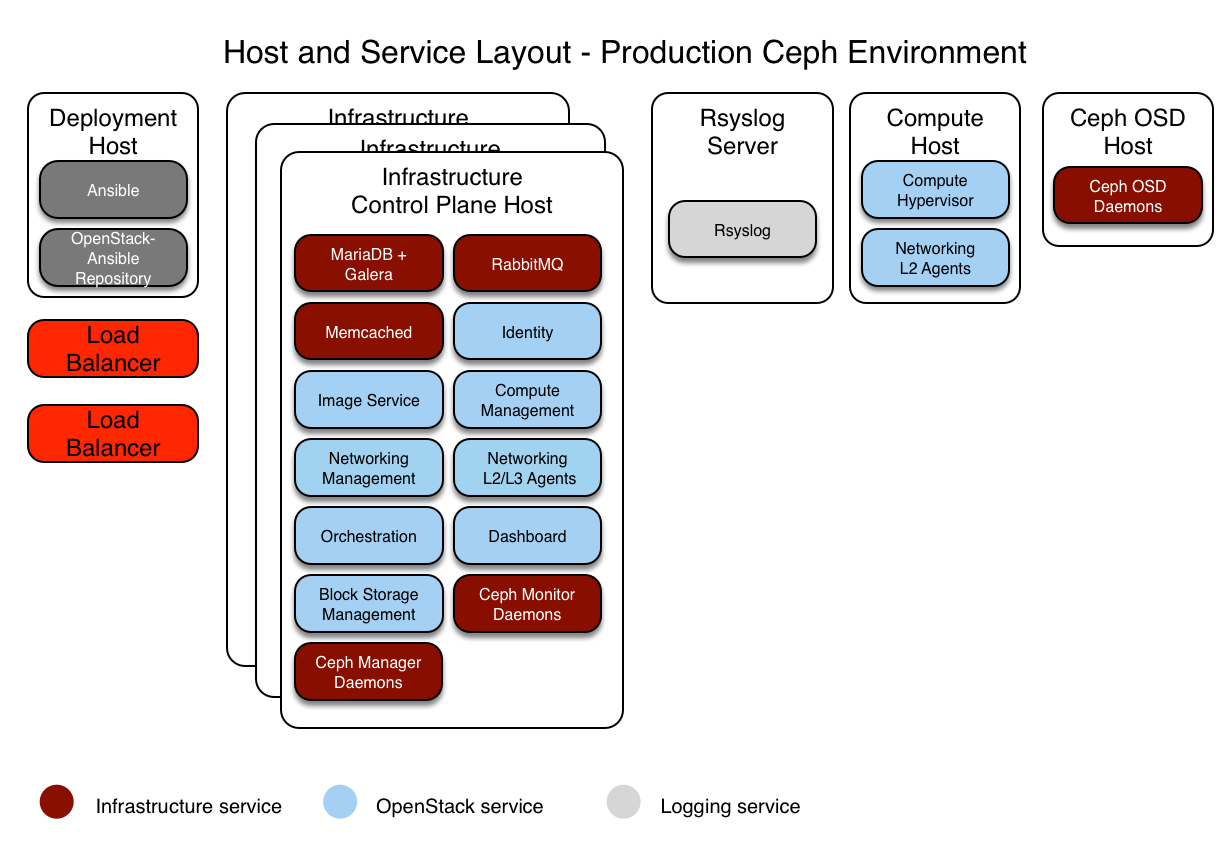

In diesem Abschnitt wird eine Beispielproduktionsumgebung für eine funktionierende OpenStack-Ansible-Bereitstellung (OSA) mit Hochverfügbarkeitsdiensten und die Verwendung des Ceph-Back-Ends für Abbilder, Datenträger und Instanzen beschrieben.

This example environment has the following characteristics:

- Three infrastructure (control plane) hosts with ceph-mon containers
- Two compute hosts
- Three Ceph OSD storage hosts
- One log aggregation host
- Multiple network interface cards (NICs) configured as bonded pairs for each host
- Full compute kit with the Telemetry service (ceilometer) included, with Ceph configured as a storage back end for the Image (glance) and Block Storage (cinder) services
- Internet access via the router address 172.29.236.1 on the management network

Integration with Ceph

OpenStack-Ansible allows Ceph storage cluster integration in two ways:

- connecting to your own Ceph cluster by pointing to its information in `user_variables.yml`
- deploying a Ceph cluster by using the roles maintained by the Ceph-Ansible project. Deployers can enable the `ceph-install` playbook by adding hosts to the `ceph-mon_hosts`, `ceph-osd_hosts` and `ceph-rgw_hosts` groups in `openstack_user_config.yml`, and then configuring Ceph-Ansible specific vars in the OpenStack-Ansible `user_variables.yml` file.

This example focuses on the deployment of both OpenStack-Ansible and its Ceph cluster.

Network configuration

Network CIDR/VLAN assignments

The following CIDR and VLAN assignments are used for this environment.

| Network | CIDR | VLAN |
|---|---|---|
| Management Network | 172.29.236.0/22 | 10 |
| Tunnel (VXLAN) Network | 172.29.240.0/22 | 30 |
| Storage Network | 172.29.244.0/22 | 20 |
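As a quick sanity check of the CIDR table, the following Python sketch uses the standard `ipaddress` module to show that each /22 network spans 1,024 addresses with the netmask 255.255.252.0 used in the host interface files, and that the three networks do not overlap. The `NETWORKS` mapping and the helper functions are illustrative names that simply restate the table; they are not part of OpenStack-Ansible.

```python
import ipaddress
from itertools import combinations

# Restates the CIDR/VLAN table; the dictionary keys are illustrative only.
NETWORKS = {
    "management": ("172.29.236.0/22", 10),
    "tunnel": ("172.29.240.0/22", 30),
    "storage": ("172.29.244.0/22", 20),
}

def describe(name: str) -> tuple[str, int]:
    """Return the dotted netmask and total address count of a network."""
    net = ipaddress.ip_network(NETWORKS[name][0])
    return str(net.netmask), net.num_addresses

def overlapping_pairs() -> list[tuple[str, str]]:
    """List every pair of networks whose address ranges overlap."""
    nets = {n: ipaddress.ip_network(cidr) for n, (cidr, _vlan) in NETWORKS.items()}
    return [(a, b) for a, b in combinations(nets, 2) if nets[a].overlaps(nets[b])]

for name in NETWORKS:
    print(name, *describe(name))
print("overlaps:", overlapping_pairs())
```

An empty overlap list means the management, tunnel, and storage traffic can be routed and filtered independently, which is what the separate VLANs are meant to provide.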

IP assignments

The following host name and IP address assignments are used for this environment.

| Host name | Management IP | Tunnel (VXLAN) IP | Storage IP |
|---|---|---|---|
| lb_vip_address | 172.29.236.9 | | |
| infra1 | 172.29.236.11 | 172.29.240.11 | |
| infra2 | 172.29.236.12 | 172.29.240.12 | |
| infra3 | 172.29.236.13 | 172.29.240.13 | |
| log1 | 172.29.236.14 | | |
| compute1 | 172.29.236.16 | 172.29.240.16 | 172.29.244.16 |
| compute2 | 172.29.236.17 | 172.29.240.17 | 172.29.244.17 |
| osd1 | 172.29.236.18 | | 172.29.244.18 |
| osd2 | 172.29.236.19 | | 172.29.244.19 |
| osd3 | 172.29.236.20 | | 172.29.244.20 |
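The sketch below, again using only Python's standard `ipaddress` module, checks that every address in the IP assignment table falls inside the matching network from the CIDR table and that no address is assigned twice. The `ASSIGNMENTS` dictionary is transcribed by hand from the table (a `None` entry means the host has no address on that network), and the function names are hypothetical.

```python
import ipaddress

# Networks from the CIDR/VLAN table.
CIDRS = {
    "mgmt": ipaddress.ip_network("172.29.236.0/22"),
    "tunnel": ipaddress.ip_network("172.29.240.0/22"),
    "storage": ipaddress.ip_network("172.29.244.0/22"),
}

# Transcribed from the IP assignment table: (management, tunnel, storage).
ASSIGNMENTS = {
    "lb_vip_address": ("172.29.236.9", None, None),
    "infra1": ("172.29.236.11", "172.29.240.11", None),
    "infra2": ("172.29.236.12", "172.29.240.12", None),
    "infra3": ("172.29.236.13", "172.29.240.13", None),
    "log1": ("172.29.236.14", None, None),
    "compute1": ("172.29.236.16", "172.29.240.16", "172.29.244.16"),
    "compute2": ("172.29.236.17", "172.29.240.17", "172.29.244.17"),
    "osd1": ("172.29.236.18", None, "172.29.244.18"),
    "osd2": ("172.29.236.19", None, "172.29.244.19"),
    "osd3": ("172.29.236.20", None, "172.29.244.20"),
}

def misplaced() -> list[str]:
    """Return 'host/network' labels for addresses outside their network."""
    problems = []
    for host, addrs in ASSIGNMENTS.items():
        for net_name, addr in zip(("mgmt", "tunnel", "storage"), addrs):
            if addr is not None and ipaddress.ip_address(addr) not in CIDRS[net_name]:
                problems.append(f"{host}/{net_name}")
    return problems

def duplicates() -> list[str]:
    """Return any address that is assigned to more than one host or network."""
    seen, dupes = set(), []
    for addrs in ASSIGNMENTS.values():
        for addr in filter(None, addrs):  # skip the None placeholders
            if addr in seen:
                dupes.append(addr)
            seen.add(addr)
    return dupes

print("misplaced:", misplaced())
print("duplicates:", duplicates())
```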

Host network configuration

Each host requires the correct network bridges to be implemented. The following is the /etc/network/interfaces file for `infra1`.

Note

If your environment does not have eth0, but instead has p1p1 or some other interface name, ensure that all references to eth0 in all configuration files are replaced with the appropriate name. The same applies to additional network interfaces.
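When renaming interfaces, a whole-word substitution avoids accidental partial matches. The following Python sketch uses a hypothetical `rename_interface` helper (not part of OpenStack-Ansible) that replaces an interface name only where it appears as a complete word, so renaming `eth1` leaves `eth10` and `eth11` untouched. It is intended for host network files such as /etc/network/interfaces; note that the `container_interface` values in openstack_user_config.yml (for example `eth1` or `eth10`) name interfaces inside the containers rather than host NICs, so review those separately instead of rewriting them blindly.

```python
import re

def rename_interface(config_text: str, old: str, new: str) -> str:
    """Replace whole-word occurrences of interface name `old` with `new`."""
    # Word boundaries on both sides stop "eth1" from matching inside "eth10".
    # A following "." still counts as a boundary, so a VLAN sub-interface
    # such as "eth1.30" is renamed together with its parent interface.
    pattern = re.compile(r"\b" + re.escape(old) + r"\b")
    # A function replacement keeps any backslashes in `new` literal.
    return pattern.sub(lambda _match: new, config_text)

sample = """auto eth1
iface eth1 inet manual
    bond-master bond1
    bond-primary eth1
# eth10 and eth11 must not change
"""

print(rename_interface(sample, "eth1", "p1p2"))
```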

```
# This is a multi-NIC bonded configuration to implement the required bridges
# for OpenStack-Ansible. This illustrates the configuration of the first
# Infrastructure host and the IP addresses assigned should be adapted
# for implementation on the other hosts.
#
# After implementing this configuration, the host will need to be
# rebooted.

# Assuming that eth0/1 and eth2/3 are dual port NICs we pair
# eth0 with eth2 and eth1 with eth3 for increased resiliency
# in the case of one interface card failing.
auto eth0
iface eth0 inet manual
    bond-master bond0
    bond-primary eth0

auto eth1
iface eth1 inet manual
    bond-master bond1
    bond-primary eth1

auto eth2
iface eth2 inet manual
    bond-master bond0

auto eth3
iface eth3 inet manual
    bond-master bond1

# Create a bonded interface. Note that the "bond-slaves" is set to none. This
# is because the bond-master has already been set in the raw interfaces for
# the new bond0.
auto bond0
iface bond0 inet manual
    bond-slaves none
    bond-mode active-backup
    bond-miimon 100
    bond-downdelay 200
    bond-updelay 200

# This bond will carry VLAN and VXLAN traffic to ensure isolation from
# control plane traffic on bond0.
auto bond1
iface bond1 inet manual
    bond-slaves none
    bond-mode active-backup
    bond-miimon 100
    bond-downdelay 250
    bond-updelay 250

# Container/Host management VLAN interface
auto bond0.10
iface bond0.10 inet manual
    vlan-raw-device bond0

# OpenStack Networking VXLAN (tunnel/overlay) VLAN interface
auto bond1.30
iface bond1.30 inet manual
    vlan-raw-device bond1

# Storage network VLAN interface (optional)
auto bond0.20
iface bond0.20 inet manual
    vlan-raw-device bond0

# Container/Host management bridge
auto br-mgmt
iface br-mgmt inet static
    bridge_stp off
    bridge_waitport 0
    bridge_fd 0
    bridge_ports bond0.10
    address 172.29.236.11
    netmask 255.255.252.0
    gateway 172.29.236.1
    dns-nameservers 8.8.8.8 8.8.4.4

# OpenStack Networking VXLAN (tunnel/overlay) bridge
#
# The COMPUTE, NETWORK and INFRA nodes must have an IP address
# on this bridge.
#
auto br-vxlan
iface br-vxlan inet static
    bridge_stp off
    bridge_waitport 0
    bridge_fd 0
    bridge_ports bond1.30
    address 172.29.240.11
    netmask 255.255.252.0

# OpenStack Networking VLAN bridge
auto br-vlan
iface br-vlan inet manual
    bridge_stp off
    bridge_waitport 0
    bridge_fd 0
    bridge_ports bond1

# compute1 Network VLAN bridge
#auto br-vlan
#iface br-vlan inet manual
#    bridge_stp off
#    bridge_waitport 0
#    bridge_fd 0
#
# For tenant vlan support, create a veth pair to be used when the neutron
# agent is not containerized on the compute hosts. 'eth12' is the value used on
# the host_bind_override parameter of the br-vlan network section of the
# openstack_user_config example file. The veth peer name must match the value
# specified on the host_bind_override parameter.
#
# When the neutron agent is containerized it will use the container_interface
# value of the br-vlan network, which is also the same 'eth12' value.
#
# Create veth pair, do not abort if already exists
#    pre-up ip link add br-vlan-veth type veth peer name eth12 || true
# Set both ends UP
#    pre-up ip link set br-vlan-veth up
#    pre-up ip link set eth12 up
# Delete veth pair on DOWN
#    post-down ip link del br-vlan-veth || true
#    bridge_ports bond1 br-vlan-veth

# Storage bridge (optional)
#
# Only the COMPUTE and STORAGE nodes must have an IP address
# on this bridge. When used by infrastructure nodes, the
# IP addresses are assigned to containers which use this
# bridge.
#
auto br-storage
iface br-storage inet manual
    bridge_stp off
    bridge_waitport 0
    bridge_fd 0
    bridge_ports bond0.20

# compute1 Storage bridge
#auto br-storage
#iface br-storage inet static
#    bridge_stp off
#    bridge_waitport 0
#    bridge_fd 0
#    bridge_ports bond0.20
#    address 172.29.244.16
#    netmask 255.255.252.0
```
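This file is written for infra1, and its header notes that the assigned addresses must be adapted for the other hosts. As a minimal sketch of that adaptation, the Python below derives the `address`/`netmask` pair for the br-mgmt and br-vxlan stanzas of each infrastructure host from the IP assignment table, using the standard `ipaddress` module. The `HOSTS` mapping and the `address_lines` function are illustrative assumptions, not part of OpenStack-Ansible.

```python
import ipaddress

# Values transcribed from the IP assignment table for the infrastructure hosts.
HOSTS = {
    "infra1": {"br-mgmt": "172.29.236.11", "br-vxlan": "172.29.240.11"},
    "infra2": {"br-mgmt": "172.29.236.12", "br-vxlan": "172.29.240.12"},
    "infra3": {"br-mgmt": "172.29.236.13", "br-vxlan": "172.29.240.13"},
}
PREFIX = 22  # management and tunnel networks are both /22

def address_lines(host: str) -> dict[str, list[str]]:
    """Return the address and netmask lines for each bridge stanza of `host`."""
    lines = {}
    for bridge, ip in HOSTS[host].items():
        iface = ipaddress.ip_interface(f"{ip}/{PREFIX}")
        lines[bridge] = [f"address {iface.ip}", f"netmask {iface.netmask}"]
    return lines

for bridge, body in address_lines("infra2").items():
    print(bridge, body)
```

Only the per-host addresses change between infrastructure hosts; the bond, VLAN, and bridge port definitions stay the same.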

Deployment configuration

Environment layout

The /etc/openstack_deploy/openstack_user_config.yml file defines the environment layout.

The following configuration describes the layout for this environment.

```yaml
---
cidr_networks: &cidr_networks
  container: 172.29.236.0/22
  tunnel: 172.29.240.0/22
  storage: 172.29.244.0/22

used_ips:
  - "172.29.236.1,172.29.236.50"
  - "172.29.240.1,172.29.240.50"
  - "172.29.244.1,172.29.244.50"
  - "172.29.248.1,172.29.248.50"

global_overrides:
  cidr_networks: *cidr_networks
  internal_lb_vip_address: 172.29.236.9
  #
  # The below domain name must resolve to an IP address
  # in the CIDR specified in haproxy_keepalived_external_vip_cidr.
  # If using different protocols (https/http) for the public/internal
  # endpoints the two addresses must be different.
  #
  external_lb_vip_address: openstack.example.com
  management_bridge: "br-mgmt"
  provider_networks:
    - network:
        container_bridge: "br-mgmt"
        container_type: "veth"
        container_interface: "eth1"
        ip_from_q: "container"
        type: "raw"
        group_binds:
          - all_containers
          - hosts
        is_container_address: true
    - network:
        container_bridge: "br-vxlan"
        container_type: "veth"
        container_interface: "eth10"
        ip_from_q: "tunnel"
        type: "vxlan"
        range: "1:1000"
        net_name: "vxlan"
        group_binds:
          - neutron_linuxbridge_agent
    - network:
        container_bridge: "br-vlan"
        container_type: "veth"
        container_interface: "eth12"
        host_bind_override: "eth12"
        type: "flat"
        net_name: "flat"
        group_binds:
          - neutron_linuxbridge_agent
    - network:
        container_bridge: "br-vlan"
        container_type: "veth"
        container_interface: "eth11"
        type: "vlan"
        range: "101:200,301:400"
        net_name: "vlan"
        group_binds:
          - neutron_linuxbridge_agent
    - network:
        container_bridge: "br-storage"
        container_type: "veth"
        container_interface: "eth2"
        ip_from_q: "storage"
        type: "raw"
        group_binds:
          - glance_api
          - cinder_api
          - cinder_volume
          - manila_share
          - nova_compute
          - ceph-osd

###
### Infrastructure
###

_infrastructure_hosts: &infrastructure_hosts
  infra1:
    ip: 172.29.236.11
  infra2:
    ip: 172.29.236.12
  infra3:
    ip: 172.29.236.13

# nova hypervisors
compute_hosts: &compute_hosts
  compute1:
    ip: 172.29.236.16
  compute2:
    ip: 172.29.236.17

ceph-osd_hosts:
  osd1:
    ip: 172.29.236.18
  osd2:
    ip: 172.29.236.19
  osd3:
    ip: 172.29.236.20

# galera, memcache, rabbitmq, utility
shared-infra_hosts: *infrastructure_hosts

# ceph-mon containers
ceph-mon_hosts: *infrastructure_hosts

# ceph-mds containers
ceph-mds_hosts: *infrastructure_hosts

# ganesha-nfs hosts
ceph-nfs_hosts: *infrastructure_hosts

# repository (apt cache, python packages, etc)
repo-infra_hosts: *infrastructure_hosts

# load balancer
# Ideally the load balancer should not use the Infrastructure hosts.
# Dedicated hardware is best for improved performance and security.
haproxy_hosts: *infrastructure_hosts

# rsyslog server
log_hosts:
  log1:
    ip: 172.29.236.14

###
### OpenStack
###

# keystone
identity_hosts: *infrastructure_hosts

# cinder api services
storage-infra_hosts: *infrastructure_hosts

# cinder volume hosts (Ceph RBD-backed)
storage_hosts: *infrastructure_hosts

# glance
image_hosts: *infrastructure_hosts

# placement
placement-infra_hosts: *infrastructure_hosts

# nova api, conductor, etc services
compute-infra_hosts: *infrastructure_hosts

# heat
orchestration_hosts: *infrastructure_hosts

# horizon
dashboard_hosts: *infrastructure_hosts

# neutron server, agents (L3, etc)
network_hosts: *infrastructure_hosts

# ceilometer (telemetry data collection)
metering-infra_hosts: *infrastructure_hosts

# aodh (telemetry alarm service)
metering-alarm_hosts: *infrastructure_hosts

# gnocchi (telemetry metrics storage)
metrics_hosts: *infrastructure_hosts

# manila (share service)
manila-infra_hosts: *infrastructure_hosts
manila-data_hosts: *infrastructure_hosts

# ceilometer compute agent (telemetry data collection)
metering-compute_hosts: *compute_hosts
```
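Host addresses are assigned statically in this file, so they should not also be handed out to containers from the same networks; `used_ips` is the list that excludes addresses from container assignment. Assuming each `used_ips` entry is either a single address or a comma-separated `start,end` range as written above, the following Python sketch confirms that the internal load balancer VIP and every host `ip` value fall inside a reserved range. The helper names are hypothetical and the address list is transcribed by hand.

```python
import ipaddress

# Copied from the used_ips section of openstack_user_config.yml.
USED_IPS = [
    "172.29.236.1,172.29.236.50",
    "172.29.240.1,172.29.240.50",
    "172.29.244.1,172.29.244.50",
    "172.29.248.1,172.29.248.50",
]

# internal_lb_vip_address plus the "ip" value of every host entry.
STATIC_IPS = {
    "internal_lb_vip_address": "172.29.236.9",
    "infra1": "172.29.236.11",
    "infra2": "172.29.236.12",
    "infra3": "172.29.236.13",
    "log1": "172.29.236.14",
    "compute1": "172.29.236.16",
    "compute2": "172.29.236.17",
    "osd1": "172.29.236.18",
    "osd2": "172.29.236.19",
    "osd3": "172.29.236.20",
}

def parse_entry(entry: str):
    """Turn 'start,end' (or a single address) into an inclusive (low, high) pair."""
    start, _, end = entry.partition(",")
    low = ipaddress.ip_address(start.strip())
    high = ipaddress.ip_address(end.strip()) if end else low
    return low, high

RANGES = [parse_entry(e) for e in USED_IPS]

def is_reserved(addr: str) -> bool:
    """Return True if `addr` lies inside any used_ips range."""
    ip = ipaddress.ip_address(addr)
    return any(low <= ip <= high for low, high in RANGES)

unreserved = [name for name, addr in STATIC_IPS.items() if not is_reserved(addr)]
print("not covered by used_ips:", unreserved)
```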

Environment customizations

The optionally deployed files in /etc/openstack_deploy/env.d allow the customization of Ansible groups. This allows the deployer to set whether the services will run in a container (the default), or on the host (on metal).

For a Ceph environment, you can run the cinder-volume service in a container. To do this, create a /etc/openstack_deploy/env.d/cinder.yml file with the following content:

```yaml
---
# This file contains an example to show how to set
# the cinder-volume service to run in a container.
#
# Important note:
# When using LVM or any iSCSI-based cinder backends, such as NetApp with
# iSCSI protocol, the cinder-volume service *must* run on metal.
# Reference: https://bugs.launchpad.net/ubuntu/+source/lxc/+bug/1226855

container_skel:
  cinder_volumes_container:
    properties:
      is_metal: false
```

User variables

The /etc/openstack_deploy/user_variables.yml file defines the global overrides for the default variables.

For this example environment, we configure a highly available (HA) load balancer. We implement the load balancer (HAProxy) with an HA layer (keepalived) on the infrastructure hosts. Your /etc/openstack_deploy/user_variables.yml must have the following content to configure HAProxy, keepalived, and Ceph:

```yaml
---
# Because we have three HAProxy nodes, we need
# one active LB IP, and we use keepalived for that.

## Load Balancer Configuration (haproxy/keepalived)
haproxy_keepalived_external_vip_cidr: "<external_ip_address>/<netmask>"
haproxy_keepalived_internal_vip_cidr: "172.29.236.0/22"
haproxy_keepalived_external_interface: ens2
haproxy_keepalived_internal_interface: br-mgmt

## Ceph cluster fsid (must be generated before first run)
## Generate a uuid using: python -c 'import uuid; print(str(uuid.uuid4()))'
generate_fsid: false
fsid: 116f14c4-7fe1-40e4-94eb-9240b63de5c1 # Replace with your generated UUID

## ceph-ansible settings
## See https://github.com/ceph/ceph-ansible/tree/master/group_vars for
## additional configuration options available.
monitor_address_block: "{{ cidr_networks.container }}"
public_network: "{{ cidr_networks.container }}"
cluster_network: "{{ cidr_networks.storage }}"
journal_size: 10240 # size in MB
# ceph-ansible automatically creates pools & keys for OpenStack services
openstack_config: true
cinder_ceph_client: cinder
glance_ceph_client: glance
glance_default_store: rbd
glance_rbd_store_pool: images
nova_libvirt_images_rbd_pool: vms

cinder_backends:
  RBD:
    volume_driver: cinder.volume.drivers.rbd.RBDDriver
    rbd_pool: volumes
    rbd_ceph_conf: /etc/ceph/ceph.conf
    rbd_store_chunk_size: 8
    volume_backend_name: rbddriver
    rbd_user: "{{ cinder_ceph_client }}"
    rbd_secret_uuid: "{{ cinder_ceph_client_uuid }}"
    report_discard_supported: true
```
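The `fsid` must be a UUID, and the comment in the configuration generates one with `uuid.uuid4()`. The Python sketch below does the same and adds a check, using only the standard `uuid` module, that a value pasted into user_variables.yml is written in the canonical lowercase, hyphenated form. The `new_fsid` and `is_canonical_fsid` helpers are hypothetical, and requiring the canonical form is a conservative assumption rather than a documented Ceph rule.

```python
import uuid

def new_fsid() -> str:
    """Generate a random UUID suitable for the fsid variable."""
    return str(uuid.uuid4())

def is_canonical_fsid(value: str) -> bool:
    """Return True if value is a UUID written as 8-4-4-4-12 lowercase hex."""
    try:
        parsed = uuid.UUID(value)
    except ValueError:
        return False
    # uuid.UUID also accepts braces, "urn:uuid:" prefixes and unhyphenated
    # hex, so compare against the canonical string form as well.
    return str(parsed) == value

fsid = new_fsid()
print(fsid, is_canonical_fsid(fsid))
```

Generating the value once and pasting it into the file (with `generate_fsid: false`) keeps the cluster ID stable across playbook runs.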