6.17. OpenStack Networking (Neutron) control plane performance report for 400 nodes

- Abstract

This document presents OpenStack Networking (aka Neutron) control plane performance test results for two OpenStack environments: 200 nodes and 378 nodes. All tests were performed according to the OpenStack Networking (Neutron) control plane performance test plan.

6.17.1. Environment description

6.17.1.1. Lab A (200 nodes)

3 controllers, 196 computes, 1 node for Grafana/Prometheus

6.17.1.1.1. Hardware configuration of each server

| Component | Parameter | Value |
|---|---|---|
| server | vendor,model | Supermicro MBD-X10DRI |
| CPU | vendor,model | Intel Xeon E5-2650v3 |
| | processor_count | 2 |
| | core_count | 10 |
| | frequency_MHz | 2300 |
| RAM | vendor,model | 8x Samsung M393A2G40DB0-CPB |
| | amount_MB | 2097152 |
| NETWORK | vendor,model | Intel,I350 Dual Port |
| | bandwidth | 1G |
| | vendor,model | Intel,82599ES Dual Port |
| | bandwidth | 10G |
| STORAGE | vendor,model | Intel SSD DC S3500 Series |
| | SSD/HDD | SSD |
| | size | 240GB |
| | vendor,model | 2x WD WD5003AZEX |
| | SSD/HDD | HDD |
| | size | 500GB |

| Component | Parameter | Value |
|---|---|---|
| server | vendor,model | SUPERMICRO 5037MR-H8TRF |
| CPU | vendor,model | INTEL XEON Ivy Bridge 6C E5-2620 |
| | processor_count | 1 |
| | core_count | 6 |
| | frequency_MHz | 2100 |
| RAM | vendor,model | 4x Samsung DDRIII 8GB DDR3-1866 |
| | amount_MB | 32768 |
| NETWORK | vendor,model | AOC-STGN-i2S - 2-port |
| | bandwidth | 10G |
| STORAGE | vendor,model | Intel SSD DC S3500 Series |
| | SSD/HDD | SSD |
| | size | 80GB |
| | vendor,model | 1x WD Scorpio Black BP WD7500BPKT |
| | SSD/HDD | HDD |
| | size | 750GB |

6.17.1.2. Lab B (378 nodes)

Environment contains 4 types of servers:

- rally node
- controller node
- compute-osd node
- compute node

| Role | Servers count | Type |
|---|---|---|
| rally | 1 | 1 or 2 |
| controller | 3 | 1 or 2 |
| compute | 291 | 1 or 2 |
| compute-osd | 34 | 3 |
| compute-osd | 49 | 1 |
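As a quick consistency check, the per-role counts in the table above can be summed to confirm the 378-node total stated for Lab B. The snippet below is only an illustrative sketch; the variable and function names are hypothetical, and the numbers are copied from the table.

```python
# Server counts per role, copied from the Lab B role table.
# compute-osd appears twice because it is split across two hardware types.
lab_b_servers = [
    ("rally", 1),
    ("controller", 3),
    ("compute", 291),
    ("compute-osd", 34),
    ("compute-osd", 49),
]


def total_by_role(rows):
    """Sum server counts, merging rows that share a role name."""
    totals = {}
    for role, count in rows:
        totals[role] = totals.get(role, 0) + count
    return totals


totals = total_by_role(lab_b_servers)
total_nodes = sum(totals.values())

print(totals)
print("total nodes:", total_nodes)
```

The sum of all rows matches the 378 nodes in the section title, with 83 of them carrying the compute-osd role.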

6.17.1.2.1. Hardware configuration of each server

Each server uses one of 3 hardware configuration types, described in the table below.

| Component | Parameter | Value |
|---|---|---|
| server | vendor,model | Dell PowerEdge R630 |
| CPU | vendor,model | Intel,E5-2680 v3 |
| | processor_count | 2 |
| | core_count | 12 |
| | frequency_MHz | 2500 |
| RAM | vendor,model | Samsung, M393A2G40DB0-CPB |
| | amount_MB | 262144 |
| NETWORK | interface_names | eno1, eno2 |
| | vendor,model | Intel,X710 Dual Port |
| | bandwidth | 10G |
| | interface_names | enp3s0f0, enp3s0f1 |
| | vendor,model | Intel,X710 Dual Port |
| | bandwidth | 10G |
| STORAGE | dev_name | /dev/sda |
| | vendor,model | raid1 - Dell, PERC H730P Mini, 2 disks Intel S3610 |
| | SSD/HDD | SSD |
| | size | 3.6TB |

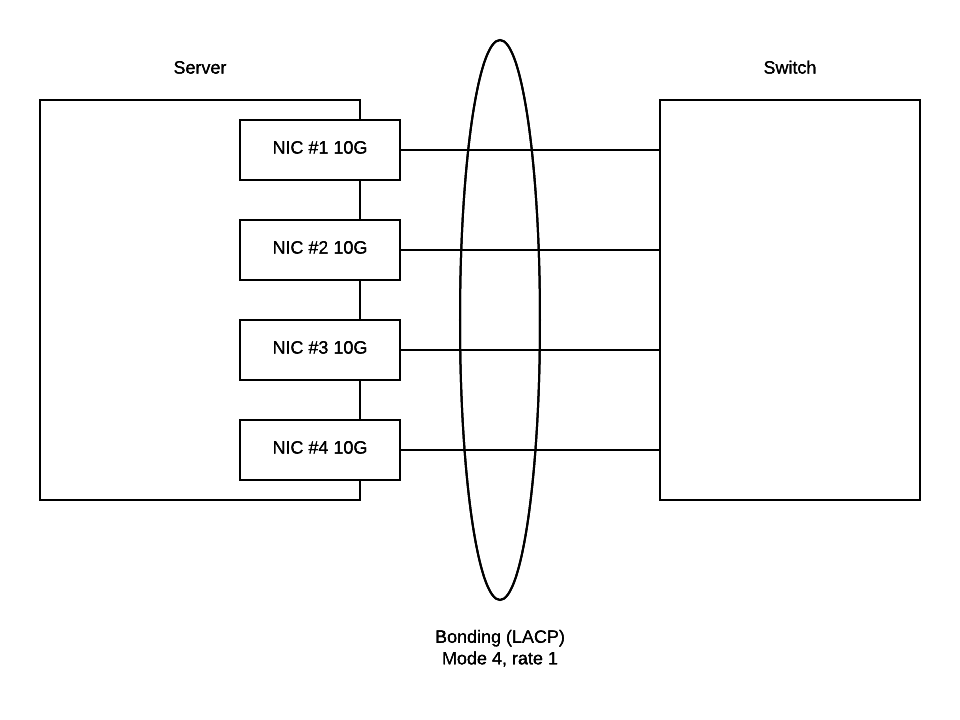

6.17.1.2.2. Network configuration of each server¶

All servers have same network configuration:

6.17.1.2.3. Software configuration on servers with controller, compute and compute-osd roles

| Role | Service name |
|---|---|
| controller | horizon, keystone, nova-api, nova-scheduler, nova-cert, nova-conductor, nova-consoleauth, nova-consoleproxy, cinder-api, cinder-backup, cinder-scheduler, cinder-volume, glance-api, glance-glare, glance-registry, neutron-dhcp-agent, neutron-l3-agent, neutron-metadata-agent, neutron-openvswitch-agent, neutron-server, heat-api, heat-api-cfn, heat-api-cloudwatch, ceph-mon, rados-gw, memcached, rabbitmq_server, mysqld, galera, corosync, pacemaker, haproxy |
| compute | nova-compute, neutron-l3-agent, neutron-metadata-agent, neutron-openvswitch-agent |
| compute-osd | nova-compute, neutron-l3-agent, neutron-metadata-agent, neutron-openvswitch-agent, ceph-osd |

| Software | Version |
|---|---|
| OpenStack | Mitaka |
| Ceph | Hammer |
| Ubuntu | Ubuntu 14.04.3 LTS |

Outputs of some commands and the contents of the /etc folder are available in the following archives:

- controller-1.tar.gz
- controller-2.tar.gz
- controller-3.tar.gz
- compute-1.tar.gz
- compute-osd-1.tar.gz

6.17.1.2.4. Software configuration on servers with Rally role

Rally should be installed on this server. See the Rally installation documentation for instructions.

| Software | Version |
|---|---|
| Rally | 0.5.0 |
| Ubuntu | Ubuntu 14.04.3 LTS |

6.17.2. Test results

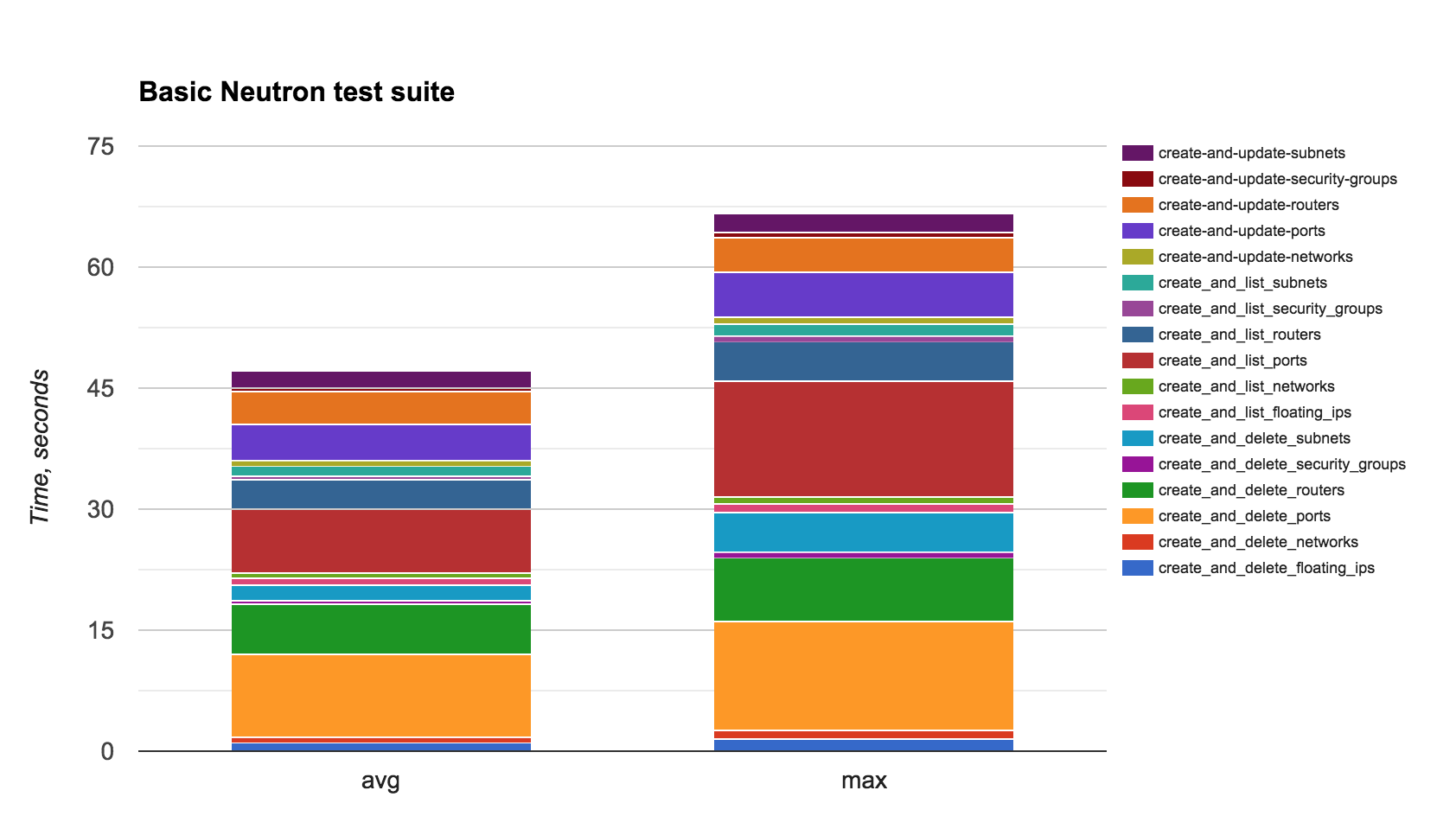

6.17.2.1. Test Case 1: Basic Neutron test suite

The following tests were run with the default configuration against Lab A (200 nodes):

- create-and-list-floating-ips
- create-and-list-networks
- create-and-list-ports
- create-and-list-routers
- create-and-list-security-groups
- create-and-list-subnets
- create-and-delete-floating-ips
- create-and-delete-networks
- create-and-delete-ports
- create-and-delete-routers
- create-and-delete-security-groups
- create-and-delete-subnets
- create-and-update-networks
- create-and-update-ports
- create-and-update-routers
- create-and-update-security-groups
- create-and-update-subnets

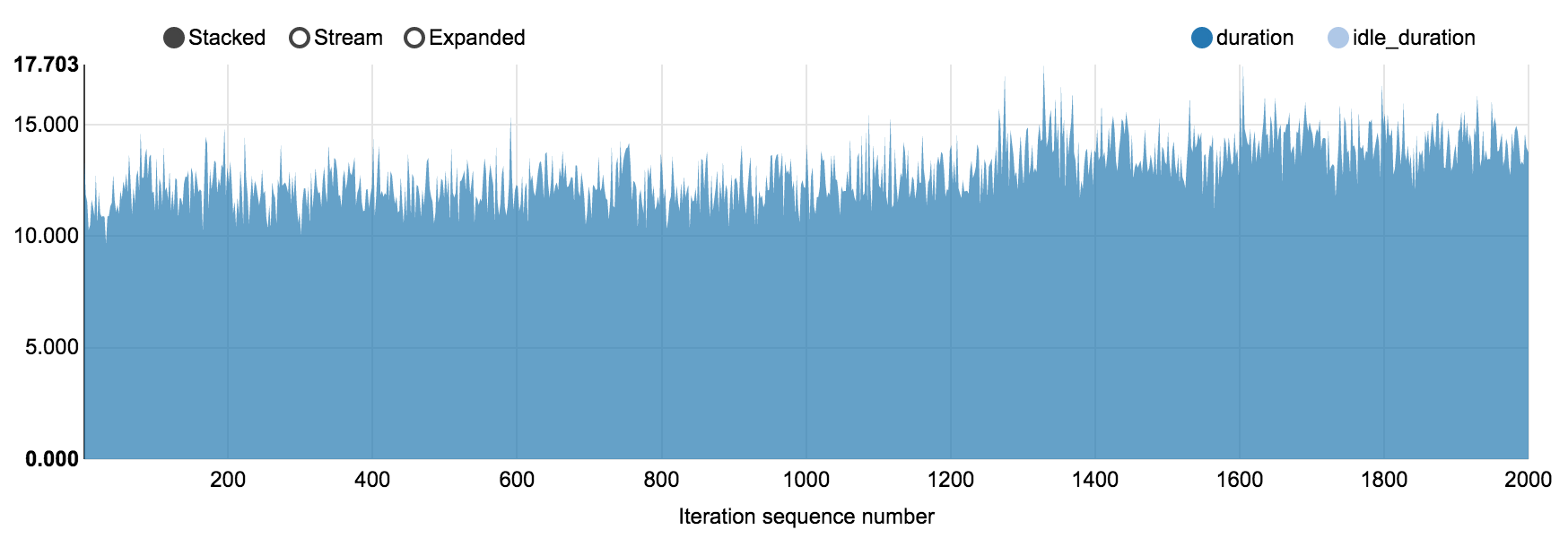

The time needed for each scenario can be comparatively presented using the following chart:

To overview extended information please download the following report:

basic_neutron.html

6.17.2.2. Test Case 2: Stressful Neutron test suite

The following tests were run against both Lab A (200 nodes) and Lab B (378 nodes):

- create-and-list-networks
- create-and-list-ports
- create-and-list-routers
- create-and-list-security-groups
- create-and-list-subnets
- boot-and-list-server
- boot-and-delete-server-with-secgroups
- boot-runcommand-delete

Here is a short summary of the collected results:

| Scenario | Iterations/concurrency (Lab A) | Iterations/concurrency (Lab B) | Time, sec (Lab A) | Time, sec (Lab B) | Errors (Lab A) | Errors (Lab B) |
|---|---|---|---|---|---|---|
| create-and-list-networks | 3000/50 | 5000/50 | avg 2.375 max 7.904 | avg 3.654 max 11.669 | 1 Internal server error while processing your request | 6 Internal server error while processing your request |
| create-and-list-ports | 1000/50 | 2000/50 | avg 123.97 max 277.977 | avg 99.274 max 270.84 | 1 Internal server error while processing your request | 0 |
| create-and-list-routers | 2000/50 | 2000/50 | avg 15.59 max 29.006 | avg 12.94 max 19.398 | 0 | 0 |
| create-and-list-security-groups | 50/1 | 1000/50 | avg 210.706 max 210.706 | avg 68.712 max 169.315 | 0 | 0 |
| create-and-list-subnets | 2000/50 | 2000/50 | avg 25.973 max 64.553 | avg 17.415 max 50.415 | 1 Internal server error while processing your request | 0 |
| boot-and-list-server | 4975/50 | 1000/50 | avg 21.445 max 40.736 | avg 14.375 max 25.21 | 0 | 0 |
| boot-and-delete-server-with-secgroups | 4975/200 | 1000/100 | avg 190.772 max 443.518 | avg 65.651 max 95.651 | 394 Server has ERROR status; The server didn’t respond in time. | 0 |
| boot-runcommand-delete | 2000/15 | 3000/50 | avg 28.39 max 35.756 | avg 28.587 max 85.659 | 34 Rally tired waiting for Host ip:<ip> to become (‘ICMP UP’), current status (‘ICMP DOWN’) | 1 Resource <Server: s_rally_b58e9bd e_Y369JdPf> has ERROR status. Deadlock found when trying to get lock. |
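To make the iterations/concurrency notation concrete: a pair such as 3000/50 corresponds to a Rally constant runner with `times` set to 3000 and `concurrency` set to 50. The sketch below builds such a task definition for create-and-list-networks on Lab A. The task files actually used for this report are not included here, so the helper name, the `context` values (tenants, users, quotas) and the empty `network_create_args` are assumptions for illustration only.

```python
import json


def constant_runner_task(scenario, times, concurrency, args=None, context=None):
    """Build a single-scenario Rally task dict using the constant runner.

    Hypothetical helper: it only assembles a dictionary in the shape of a
    Rally task file; it does not talk to Rally itself.
    """
    return {
        scenario: [
            {
                "args": args or {},
                "runner": {
                    "type": "constant",
                    "times": times,              # total number of iterations
                    "concurrency": concurrency,  # parallel iterations
                },
                "context": context or {},
            }
        ]
    }


# 3000/50 is the Lab A value for create-and-list-networks in the table.
task = constant_runner_task(
    "NeutronNetworks.create_and_list_networks",
    times=3000,
    concurrency=50,
    args={"network_create_args": {}},
    # Assumed context: one tenant with one user and unlimited network quota.
    context={
        "users": {"tenants": 1, "users_per_tenant": 1},
        "quotas": {"neutron": {"network": -1}},
    },
)

print(json.dumps(task, indent=2))
```

Saving the printed JSON to a file would give a task definition of the kind Rally accepts; the values that matter for comparing with the table are `times` and `concurrency`.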

During Rally execution, the following bugs affecting the boot-and-delete-server-with-secgroups and boot-runcommand-delete scenarios on Lab A were filed and fixed:

- Bug LP #1610303 l2pop mech fails to update_port_postcommit on a loaded cluster, fix: https://review.openstack.org/353835
- Bug LP #1614452 Port create time grows at scale due to dvr arp update, fix: https://review.openstack.org/357052

With these fixes applied on Lab B, the mentioned Rally scenarios passed successfully.
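To put the error counts in proportion, they can be expressed as a share of the iterations run. The sketch below does this for the Lab A scenarios that reported errors, using the error counts and iteration totals from the results table; the function and variable names are hypothetical.

```python
def error_rate_percent(errors, iterations):
    """Return failed iterations as a percentage of all iterations."""
    return 100.0 * errors / iterations


# (errors, iterations) for Lab A, copied from the results table.
lab_a_errors = {
    "create-and-list-networks": (1, 3000),
    "create-and-list-ports": (1, 1000),
    "create-and-list-subnets": (1, 2000),
    "boot-and-delete-server-with-secgroups": (394, 4975),
    "boot-runcommand-delete": (34, 2000),
}

rates = {
    scenario: error_rate_percent(errors, iterations)
    for scenario, (errors, iterations) in lab_a_errors.items()
}

for scenario, rate in rates.items():
    print(f"{scenario}: {rate:.2f}%")
```

On Lab A, boot-and-delete-server-with-secgroups failed in roughly 7.9% of iterations and boot-runcommand-delete in 1.7%, while the pure Neutron scenarios stayed at or below 0.1%.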

Other bugs that were faced:

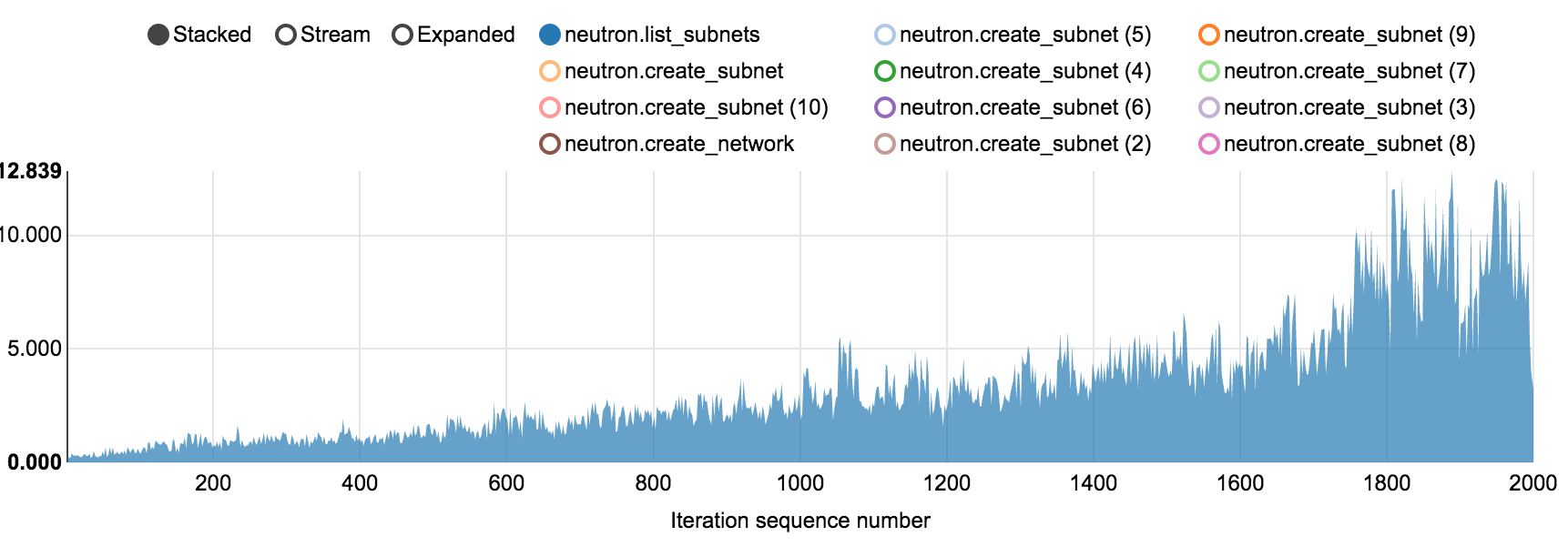

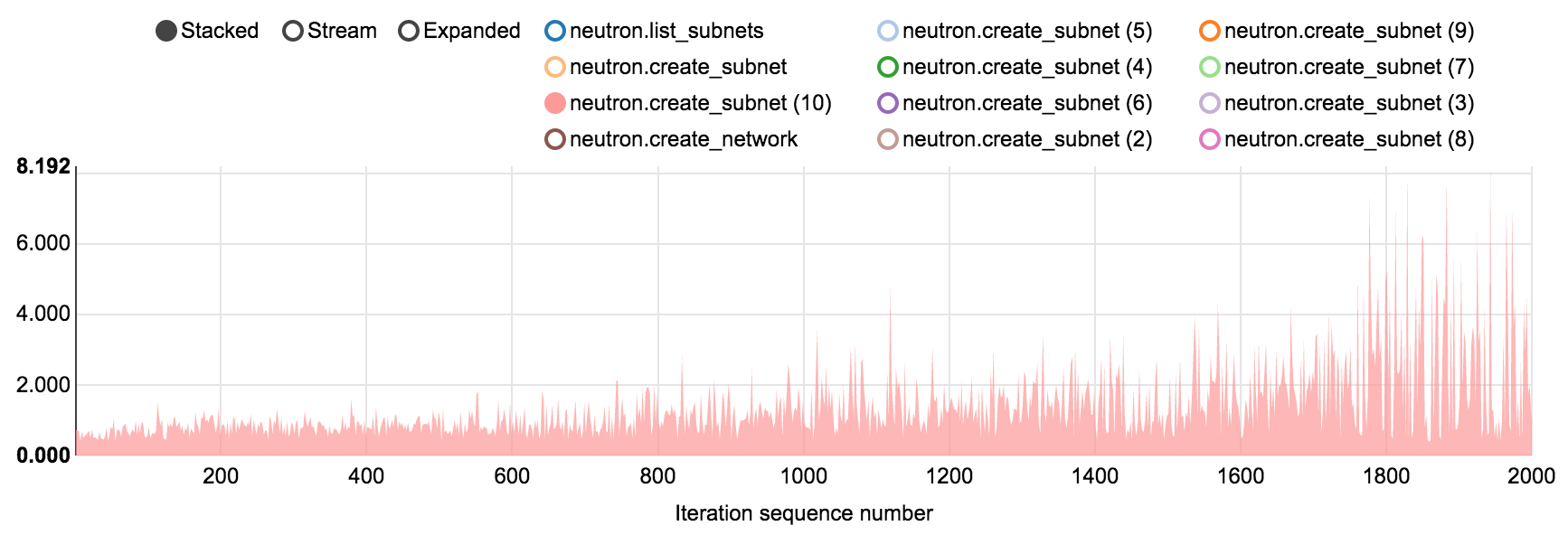

6.17.2.2.1. Observed trends

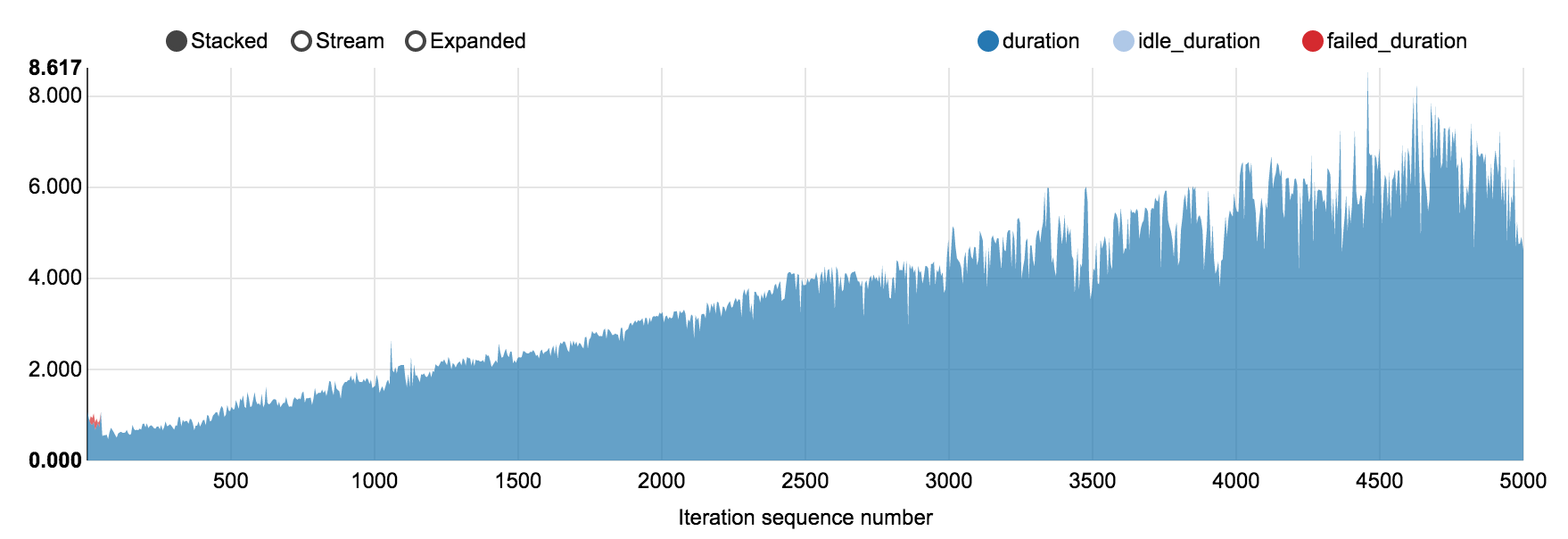

- Create and list networks: the total time spent on each iteration grows linearly.
- Create and list routers: router list operation time gradually grows from 0.12 to 1.5 sec (2000 iterations).
- Create and list routers: total load duration remains line-rate.
- Create and list subnets: subnet list operation time increases sharply after approximately 1750 iterations (from 4.5 sec at the 1700th iteration to 10.48 sec at the 1800th iteration).
- Create and list subnets: subnet creation shows time peaks after 1750 iterations.
- Create and list security groups: the secgroup list operation shows the most rapid growth, with time increasing from 0.548 sec in the first iteration to over 10 sec in the last iterations.
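The difference between the gradual router trend and the abrupt subnet jump can be quantified from the endpoints quoted above. The following sketch computes the average growth in list time per iteration for both cases; it uses only the numbers stated in the text, and the function name is hypothetical.

```python
def avg_growth_per_iteration(t_start, t_end, iterations):
    """Average increase in operation time (sec) per iteration."""
    return (t_end - t_start) / iterations


# Router list: 0.12 sec -> 1.5 sec over 2000 iterations.
router_growth = avg_growth_per_iteration(0.12, 1.5, 2000)

# Subnet list: 4.5 sec at iteration 1700 -> 10.48 sec at iteration 1800.
subnet_jump_growth = avg_growth_per_iteration(4.5, 10.48, 1800 - 1700)

print(f"router list:  {router_growth:.5f} sec/iteration")
print(f"subnet list:  {subnet_jump_growth:.5f} sec/iteration (iterations 1700-1800)")
print(f"ratio:        {subnet_jump_growth / router_growth:.1f}x")
```

Within the 1700 to 1800 window the subnet list time grows by almost 0.06 sec per iteration, nearly two orders of magnitude faster than the average router list growth of about 0.0007 sec per iteration.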

More details can be found in the original Rally report:

stress_neutron.html

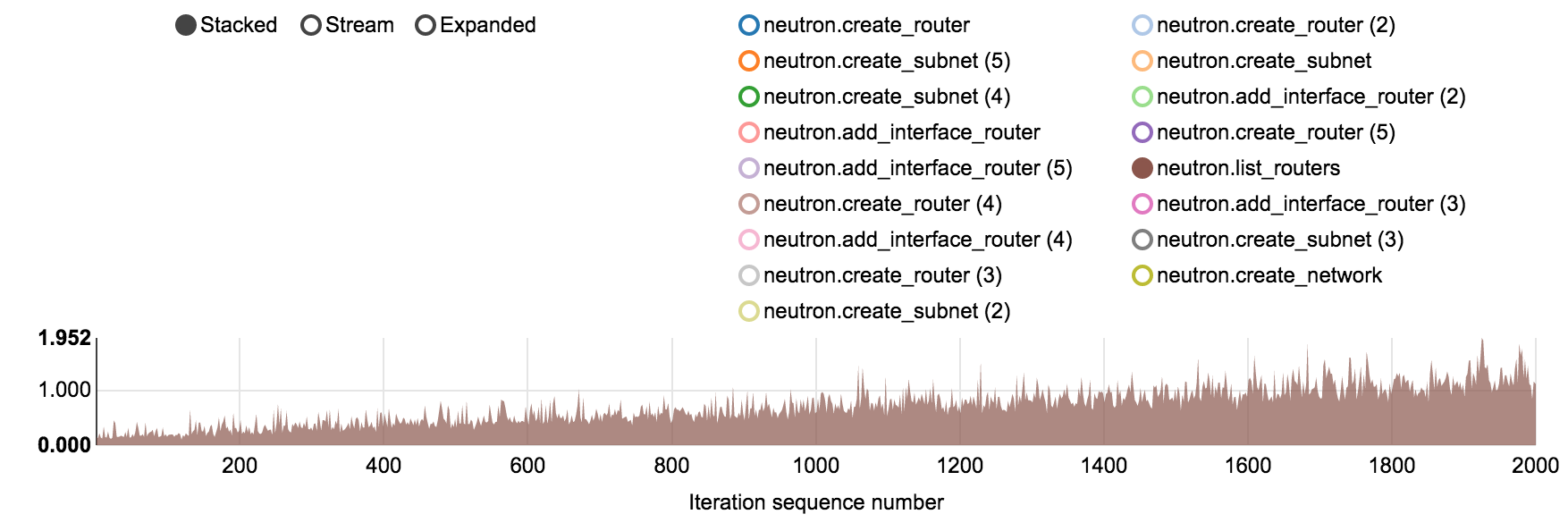

6.17.2.3. Test case 3: Neutron scalability test with many networks

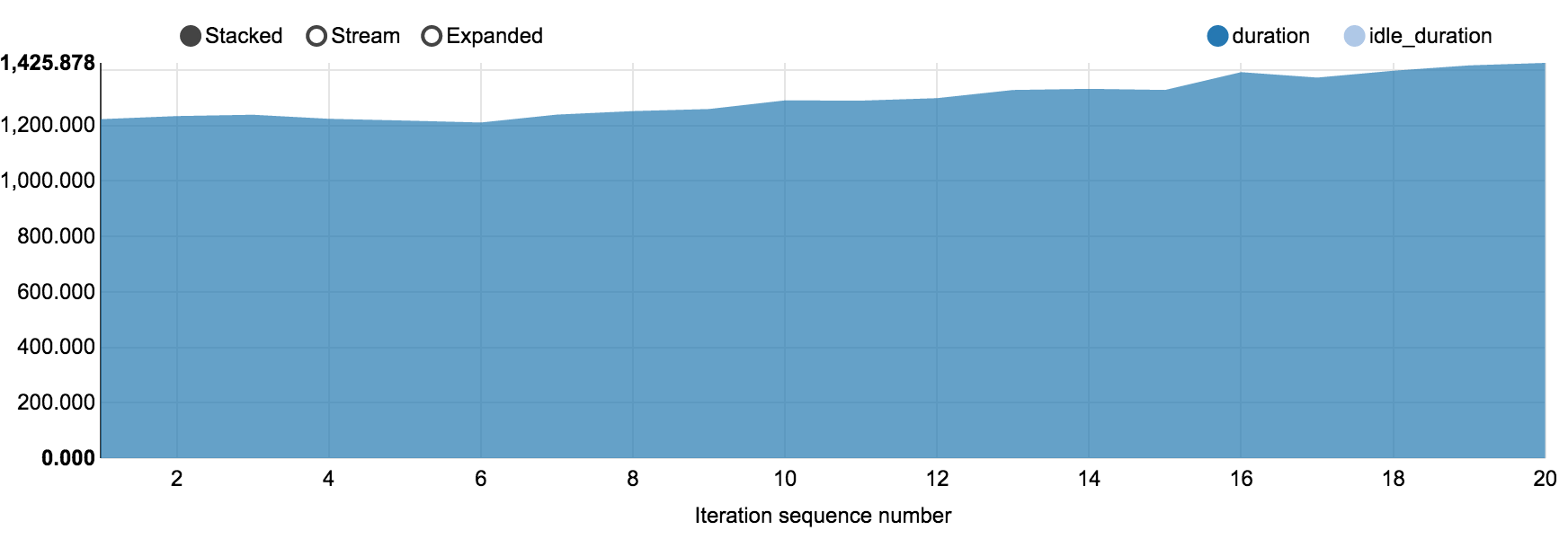

In our tests, 100 networks (each with a subnet, a router and a VM) were created per iteration.

| Iterations/concurrency | Avg time, sec | Max time, sec | Errors |
|---|---|---|---|
| 10/1 | 1237.389 | 1294.549 | 0 |
| 20/3 | 1298.611 | 1425.878 | 1 HTTPConnectionPool Read time out |

Load graph for run with 20 iterations/concurrency 3:

More details can be found in the original Rally report:

scale_neutron_networks.html

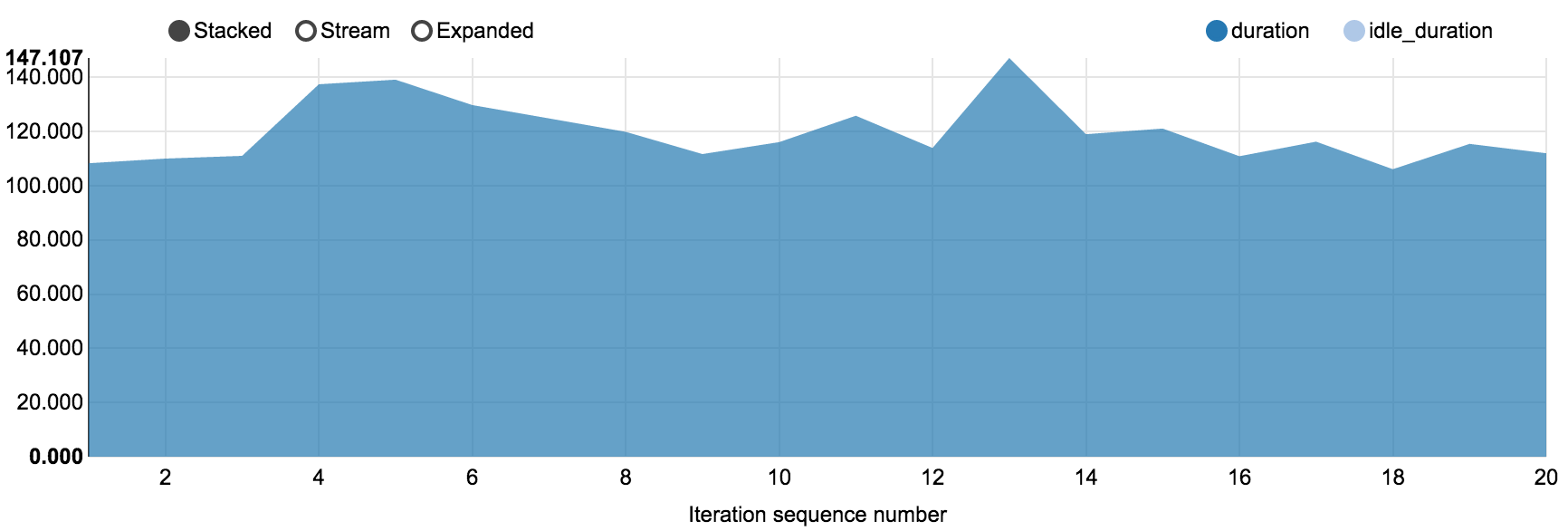

6.17.2.4. Test case 4: Neutron scalability test with many servers

During each iteration, this test creates a large number of VMs (100 in our case) in a single network, which makes it possible to check the case with a large number of ports per subnet.

| Iterations/concurrency | Avg time, sec | Max time, sec | Errors |
|---|---|---|---|
| 10/1 | 100.422 | 104.315 | 0 |
| 20/3 | 119.767 | 147.107 | 0 |
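The average times reported for the two scalability tests can be broken down per created resource and compared across concurrency levels. The sketch below uses the average times from the test case 3 and test case 4 tables and the figure of 100 networks or 100 VMs per iteration; the names used are hypothetical, and the per-resource figure is a simple division that ignores any fixed per-iteration overhead.

```python
RESOURCES_PER_ITERATION = 100  # networks (test case 3) or VMs (test case 4)

# Average iteration time in seconds, keyed by iterations/concurrency.
avg_times = {
    "many networks": {"10/1": 1237.389, "20/3": 1298.611},
    "many servers": {"10/1": 100.422, "20/3": 119.767},
}


def summarize(times):
    """Return per-resource time at concurrency 1 and slowdown at concurrency 3."""
    per_resource = times["10/1"] / RESOURCES_PER_ITERATION
    slowdown_pct = 100.0 * (times["20/3"] / times["10/1"] - 1.0)
    return per_resource, slowdown_pct


summary = {name: summarize(times) for name, times in avg_times.items()}

for name, (per_resource, slowdown_pct) in summary.items():
    print(f"{name}: {per_resource:.2f} sec per resource, "
          f"+{slowdown_pct:.1f}% avg time at concurrency 3")
```

By this estimate, one network with its subnet, router and VM takes about 12.4 sec, while one additional VM in an existing network takes about 1 sec. Raising concurrency from 1 to 3 increases the average iteration time by roughly 5% in the many-networks test and roughly 19% in the many-servers test.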

Load graph for run with 20 iterations/concurrency 3:

More details can be found in the original Rally report:

scale_neutron_servers.html