Install vexxhost magnum-cluster-api driver

About this repository

This repository includes playbooks and roles to deploy the Vexxhost magnum-cluster-api driver for the OpenStack Magnum service.

The playbooks create a complete deployment, including the control plane k8s cluster, which should result in a ready-to-go experience for operators.

The following architectural features are present:

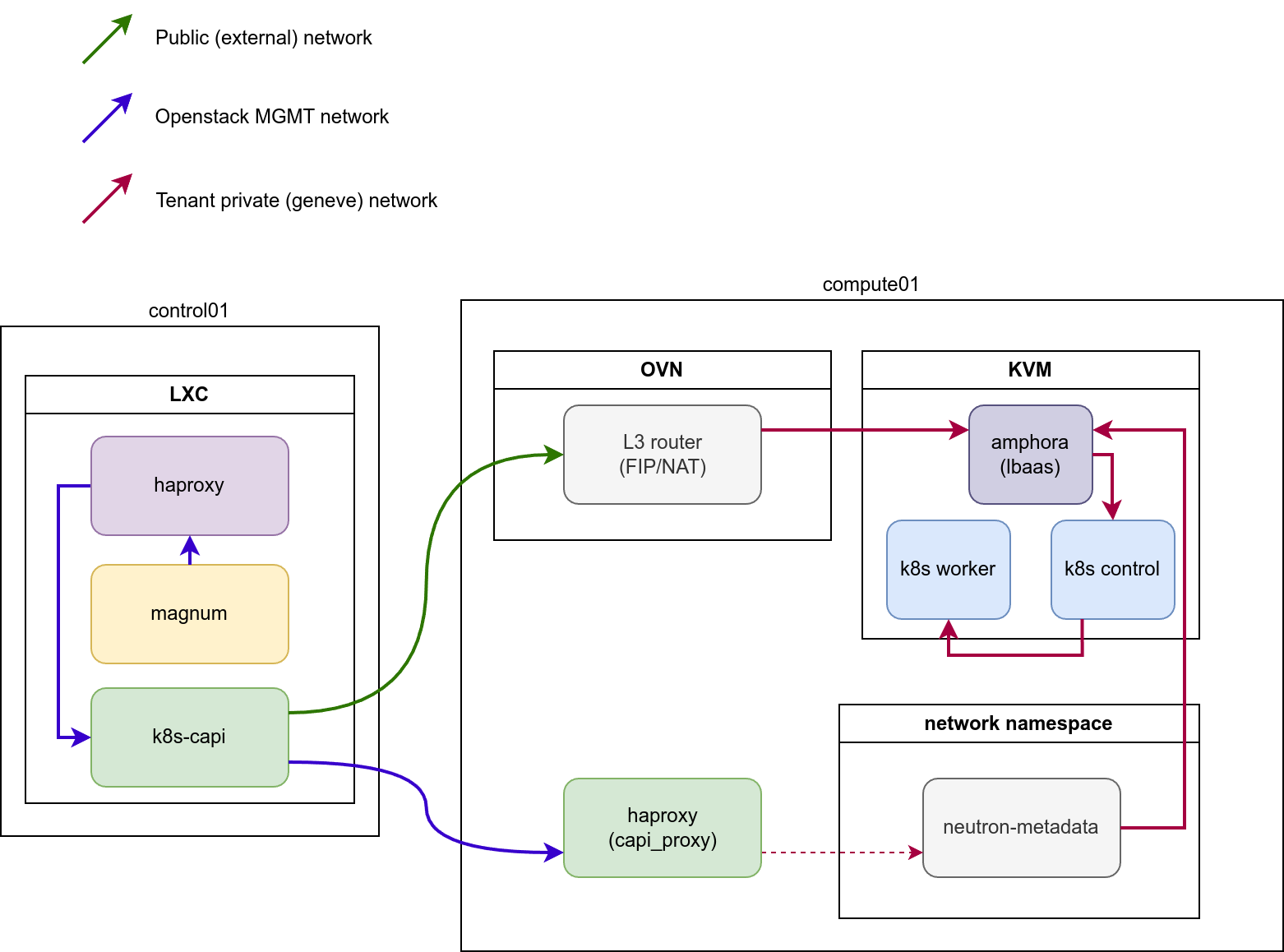

- The control plane k8s cluster is an integral part of the openstack-ansible deployment and forms part of the foundational components alongside mariadb and rabbitmq.
- The control plane k8s cluster is deployed on the infra hosts, integrated with the haproxy loadbalancer and OpenStack internal API endpoint, and not exposed outside of the deployment.
- SSL is supported between all components, and configuration is possible to support different certificate authorities on the internal and external loadbalancer endpoints.
- Control plane traffic can stay entirely within the management network if required.
- The magnum-cluster-api-proxy service is deployed to allow communication between the control plane and workload clusters when a floating IP is not attached to the workload cluster.
- It is possible to do a completely offline install for airgapped environments.

The magnum-cluster-api driver for Magnum can be found at https://github.com/vexxhost/magnum-cluster-api

Documentation for the Vexxhost magnum-cluster-api driver is at https://vexxhost.github.io/magnum-cluster-api/

The ansible collection used to deploy the control plane k8s cluster is at https://github.com/vexxhost/ansible-collection-kubernetes

The ansible collection used to deploy the container runtime for the control plane k8s cluster is at https://github.com/vexxhost/ansible-collection-containers

These playbooks require OpenStack-Ansible Caracal or later.

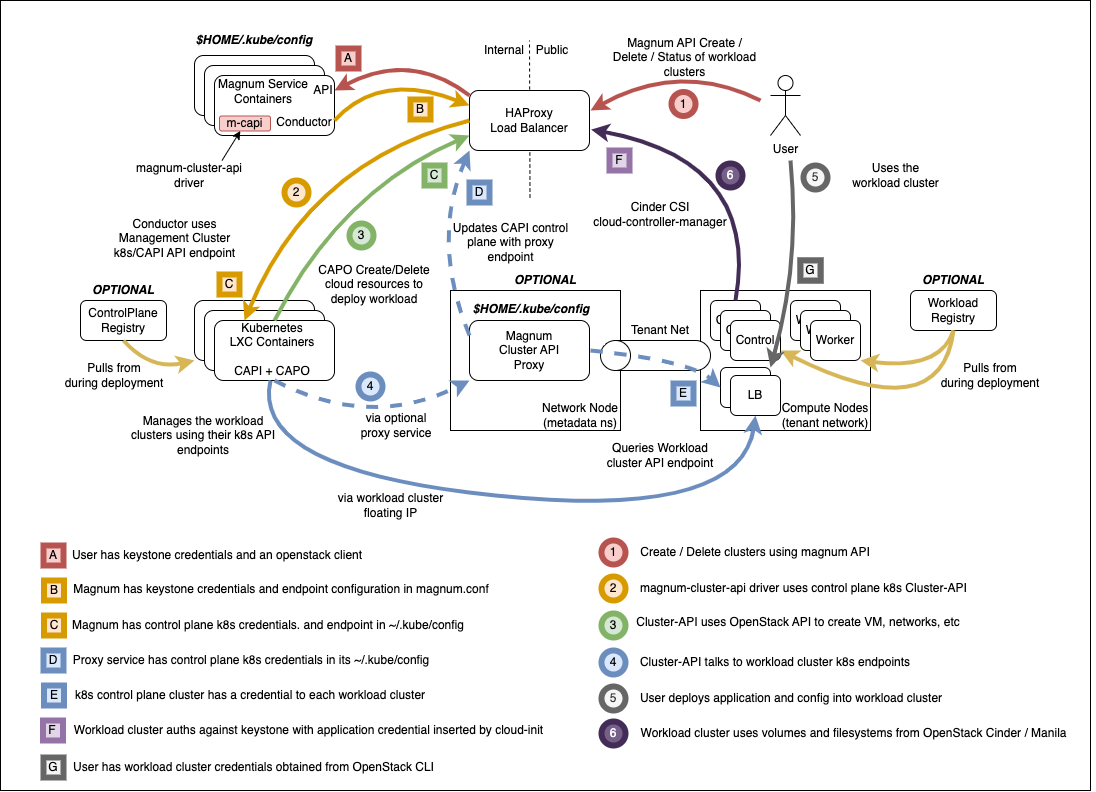

High-level overview of the Magnum infrastructure that these playbooks build and operate against.

Pre-requisites

- An existing openstack-ansible deployment
- Control plane using LXC containers; bare metal deployment is not tested
- Core OpenStack services plus Octavia

OpenStack-Ansible Integration

The playbooks are distributed as an ansible collection and integrate with OpenStack-Ansible. Add the collection to the deployment host by adding the following to /etc/openstack_deploy/user-collection-requirements.yml under the collections key:

```yaml
collections:
  - name: vexxhost.kubernetes
    source: https://github.com/vexxhost/ansible-collection-kubernetes
    type: git
    version: main
```

The collections can then be installed with the following commands:

```shell
cd /opt/openstack-ansible
openstack-ansible scripts/get-ansible-collection-requirements.yml
```

The modules in the kubernetes collection require additional python modules to be present in the ansible-runtime python virtual environment. Specify these in /etc/openstack_deploy/user-ansible-venv-requirements.txt:

```text
docker-image-py
kubernetes
```
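If you prefer to script this step, the sketch below appends each module to the requirements file only when it is not already listed, so it can be re-run safely. It is a minimal POSIX shell example. ensure_line and add_mcapi_requirements are hypothetical helper names, and the default path is the file named above.

```shell
#!/bin/sh
# Hypothetical helpers: idempotently add the Python modules required by the
# vexxhost.kubernetes collection to the OSA ansible-runtime requirements file.

# Append line $2 to file $1 unless an identical line is already present.
ensure_line() {
  file="$1"
  line="$2"
  touch "$file" || return 1
  # -x: whole-line match, -F: fixed string (no regex interpretation)
  if grep -qxF -- "$line" "$file"; then
    return 0
  fi
  # Avoid gluing the new entry onto a last line that lacks a trailing newline
  if [ -s "$file" ] && [ -n "$(tail -c 1 "$file")" ]; then
    printf '\n' >> "$file"
  fi
  printf '%s\n' "$line" >> "$file"
}

add_mcapi_requirements() {
  req_file="${1:-/etc/openstack_deploy/user-ansible-venv-requirements.txt}"
  for module in docker-image-py kubernetes; do
    ensure_line "$req_file" "$module" || return 1
  done
}
```

Calling add_mcapi_requirements with no argument updates the default file. Running it a second time leaves the file unchanged.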

OpenStack-Ansible configuration for magnum-cluster-api driver

Define the physical hosts that will host the control plane k8s cluster in /etc/openstack_deploy/conf.d/k8s.yml. This example is for an all-in-one deployment and should be adjusted to match a real deployment with multiple hosts if high availability is required.

```yaml
cluster-api_hosts:
  aio1:
    ip: 172.29.236.100
```
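For a multi-host layout, the same file lists one entry per infra host. The sketch below prints such a file from name=address pairs. gen_k8s_conf is a hypothetical helper, and the host names and addresses in the example comment are placeholders for your own infra hosts.

```shell
#!/bin/sh
# Hypothetical helper: print a conf.d/k8s.yml body from name=ip arguments.
gen_k8s_conf() {
  echo "cluster-api_hosts:"
  for pair in "$@"; do
    case "$pair" in
      *=*) ;;
      *) echo "expected name=ip, got: $pair" >&2; return 1 ;;
    esac
    name="${pair%%=*}"   # text before the first "="
    ip="${pair#*=}"      # text after the first "="
    printf '  %s:\n    ip: %s\n' "$name" "$ip"
  done
}

# Example (placeholder hosts and addresses):
# gen_k8s_conf infra1=172.29.236.11 infra2=172.29.236.12 infra3=172.29.236.13 \
#   > /etc/openstack_deploy/conf.d/k8s.yml
```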

Integrate the control plane k8s cluster with the haproxy loadbalancer in /etc/openstack_deploy/group_vars/k8s_all/haproxy_service.yml:

```yaml
---
# Copyright 2023, BBC R&D
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
#     http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.

haproxy_k8s_service:
  haproxy_service_name: k8s
  haproxy_backend_nodes: "{{ groups['k8s_all'] | default([]) }}"
  haproxy_ssl: false
  haproxy_ssl_all_vips: false
  haproxy_port: 6443
  haproxy_balance_type: tcp
  haproxy_balance_alg: leastconn
  haproxy_interval: '15000'
  haproxy_backend_port: 6443
  haproxy_backend_rise: 2
  haproxy_backend_fall: 2
  haproxy_timeout_server: '15m'
  haproxy_timeout_client: '5m'
  haproxy_backend_options:
    - tcplog
    - ssl-hello-chk
    - log-health-checks
    - httpchk GET /healthz
  haproxy_backend_httpcheck_options:
    - 'send hdr User-Agent "osa-haproxy-healthcheck" meth GET uri /healthz'
  haproxy_backend_server_options:
    - check-ssl
    - verify none
  haproxy_service_enabled: "{{ groups['k8s_all'] is defined and groups['k8s_all'] | length > 0 }}"

k8s_haproxy_services:
  - "{{ haproxy_k8s_service | combine(haproxy_k8s_service_overrides | default({})) }}"
```
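Once the control plane k8s cluster is deployed, one way to confirm that haproxy forwards to it is to request /healthz through the internal VIP on port 6443. This is the same endpoint that the haproxy httpchk above polls. The following is a minimal sketch, assuming curl is installed and that unauthenticated access to /healthz is permitted, as it usually is by default and as the haproxy check itself relies on. check_k8s_api and INTERNAL_VIP are hypothetical names.

```shell
#!/bin/sh
# Hypothetical helper: probe the k8s API /healthz endpoint through the haproxy
# front end on the internal VIP. Prints "healthy" or "unhealthy".
check_k8s_api() {
  vip="$1"
  port="${2:-6443}"
  # -k skips certificate verification (like "verify none" in the backend
  # options above), -f treats HTTP errors as failures, -s hides progress.
  # "|| true" keeps a failed request from aborting shells running with set -e.
  body="$(curl -fsk --max-time 5 "https://${vip}:${port}/healthz" 2>/dev/null || true)"
  if [ "$body" = "ok" ]; then
    echo healthy
  else
    echo unhealthy
  fi
}

# Example, with INTERNAL_VIP set to your internal_lb_vip_address:
# check_k8s_api "$INTERNAL_VIP"
```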

Configure the LXC container that will host the control plane k8s cluster to be suitable for running nested containers in /etc/openstack_deploy/group_vars/k8s_all/main.yml.

The same file also holds the configuration overrides that integrate the control plane k8s deployment with the rest of the openstack-ansible deployment:

```yaml
---
# Pick a range of addresses for cilium that do not collide with anything else
cilium_ipv4_cidr: 172.29.200.0/22

# Set a clusterctl version. Supported list can be found in defaults:
# https://github.com/vexxhost/ansible-collection-kubernetes/blob/main/roles/clusterctl/defaults/main.yml
clusterctl_version: 1.10.5
cluster_api_version: 1.10.5
cluster_api_infrastructure_provider: openstack
cluster_api_infrastructure_version: 0.12.4

# wire OSA group, host and network addresses into k8s deployment
kubelet_hostname: "{{ ansible_facts['hostname'] | lower }}"
kubelet_node_ip: "{{ management_address }}"
kubernetes_control_plane_group: k8s_container
kubernetes_hostname: "{{ internal_lb_vip_address }}"
kubernetes_non_init_namespace: true

# Define k8s version for the control cluster
kubernetes_version: 1.33.5

# Define LXC container overrides
lxc_container_config_list:
  - "lxc.apparmor.profile=unconfined"

lxc_container_mount_auto:
  - "proc:rw"
  - "sys:rw"

# Set this manually, or kube-proxy will try to do this - not possible
# in a non-init namespace and will fail in LXC
openstack_host_nf_conntrack_max: 1572864

# OSA containers don't run ssh by default so cannot use synchronize
upload_helm_chart_method: copy

# Set to True to enable periodic cluster API state collection
# (note: this is not a guaranteed functional backup)
# See https://cluster-api.sigs.k8s.io/clusterctl/commands/move
cluster_api_backups_enabled: False
```
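A candidate cilium_ipv4_cidr can be checked against the cidr_networks already listed in /etc/openstack_deploy/openstack_user_config.yml. The sketch below does this in plain POSIX shell. ip_to_int, cidr_range and cidr_overlaps are hypothetical helpers. The 172.29.236.0/22 range in the example is assumed to be the usual all-in-one management network, which contains the aio1 address used above.

```shell
#!/bin/sh
# Hypothetical helpers: check whether two IPv4 CIDRs overlap.

# Convert a dotted-quad address to a 32-bit integer.
ip_to_int() {
  old_ifs="$IFS"
  IFS=.
  set -- $1            # split on "." into four positional parameters
  IFS="$old_ifs"
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Print the first and last address of a CIDR as integers.
cidr_range() {
  base=$(ip_to_int "${1%/*}")
  prefix="${1#*/}"
  size=$(( 1 << (32 - $prefix) ))
  first=$(( $base - $base % $size ))   # clear the host bits
  echo "$first $(( $first + $size - 1 ))"
}

# Print "yes" if the two CIDRs share any address, otherwise "no".
cidr_overlaps() {
  # Word-splitting the two "first last" pairs gives $1..$4
  set -- $(cidr_range "$1") $(cidr_range "$2")
  # Ranges overlap when each one starts before the other one ends
  if [ "$1" -le "$4" ] && [ "$3" -le "$2" ]; then
    echo yes
  else
    echo no
  fi
}

# Example: cidr_overlaps 172.29.200.0/22 172.29.236.0/22   # prints "no"
```

Run cidr_overlaps once for each network in cidr_networks. Any "yes" means the cilium range needs to move.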

Set up config-overrides for the magnum service in /etc/openstack_deploy/group_vars/magnum_all/main.yml. Adjust the images and flavors here as necessary; these are just for demonstration. Upload as many images as you need for the different workload cluster kubernetes versions.

Attention must be given to the SSL configuration. Users and workload clusters will interact with the external endpoint and must trust its SSL certificate. The magnum service and cluster-api can be configured to interact with either the external or internal endpoint and must trust the corresponding SSL certificate. Depending on the environment, these certificates may be issued by different certificate authorities.

```yaml
---
# Copyright 2020, VEXXHOST, Inc.
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
#     http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.

magnum_magnum_cluster_api_git_install_branch: v0.33.0
magnum_magnum_cluster_api_git_repo: "{{ openstack_github_base_url | default('https://github.com') ~ '/vexxhost/magnum-cluster-api' }}"

# install magnum-cluster-api and kubernetes python package into magnum venv
magnum_user_pip_packages:
  - "git+{{ magnum_magnum_cluster_api_git_repo }}@{{ magnum_magnum_cluster_api_git_install_branch }}#egg=magnum-cluster-api"
  - kubernetes

# ensure that the internal VIP CA is trusted by the CAPI driver
magnum_config_overrides:
  drivers:
    # Supply a custom CA file which will be passed and used exclusively on all workload nodes
    # System trust will be used by default
    openstack_ca_file: '/usr/local/share/ca-certificates/ExampleCorpRoot.crt'
  capi_client:
    # Supply a CA that will be used exclusively for connections towards
    # OpenStack public and internal endpoints.
    ca_file: '/usr/local/share/ca-certificates/ExampleCorpRoot.crt'
    endpoint: 'internalURL'
  cluster_template:
    kubernetes_allowed_network_drivers: 'calico'
    kubernetes_default_network_driver: 'calico'
  certificates:
    cert_manager_type: x509keypair
  nova_client:
    # ideally magnum would request an appropriate microversion for nova in its client code
    api_version: '2.15'
```
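The magnum service and cluster-api must trust the certificate on whichever endpoint they use. It can therefore help to confirm the chain from inside a magnum container before creating clusters. The following is a minimal sketch using openssl s_client, assuming openssl is installed there. check_endpoint_trust and INTERNAL_VIP are hypothetical names. The helper verifies the certificate chain only, not the host name. Keystone's port 5000 on the internal VIP serves as the example endpoint.

```shell
#!/bin/sh
# Hypothetical helper: report whether the TLS certificate served on host:port
# chains to the CA in ca_file. Prints "trusted" or "untrusted".
check_endpoint_trust() {
  host="$1"
  port="$2"
  ca_file="$3"
  # -verify_return_error aborts the handshake if verification fails;
  # reading from /dev/null makes s_client exit once the handshake is done.
  if openssl s_client -connect "${host}:${port}" -CAfile "$ca_file" \
      -verify_return_error </dev/null >/dev/null 2>&1; then
    echo trusted
  else
    echo untrusted
  fi
}

# Example, with INTERNAL_VIP set to your internal_lb_vip_address:
# check_endpoint_trust "$INTERNAL_VIP" 5000 /usr/local/share/ca-certificates/ExampleCorpRoot.crt
```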

Run the deployment

For a new deployment

Run the OSA setup playbooks as usual, following the normal deployment guide.

Run the magnum-cluster-api deployment:

```shell
openstack-ansible osa_ops.mcapi_vexxhost.k8s_install
```

For an existing deployment

Ensure that the python modules required for ansible are present:

```shell
./scripts/bootstrap-ansible.sh
```

Alternatively, without re-running the bootstrap script:

```shell
/opt/ansible-runtime/bin/pip install docker-image-py
```

Add the magnum-cluster-api driver to the magnum service:

```shell
openstack-ansible openstack.osa.magnum
```

Create the k8s control plane containers:

```shell
openstack-ansible openstack.osa.containers_lxc_create --limit k8s_all
```

Run the magnum-cluster-api deployment:

```shell
openstack-ansible osa_ops.mcapi_vexxhost.k8s_install
```

Optionally run a functional test of magnum-cluster-api

This can be done quickly using the following playbook:

```shell
openstack-ansible osa_ops.mcapi_vexxhost.functional_test
```

This playbook will create a neutron public network, download a prebuilt k8s glance image, create a nova flavor and a magnum cluster template.

It will then deploy the workload k8s cluster using Magnum and run a sonobuoy “quick mode” test of the workload cluster.

This playbook is intended to be used on an openstack-ansible all-in-one deployment.

Use Magnum to create a workload cluster

1. Upload images
2. Create a cluster template
3. Create a workload cluster
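The three steps above correspond to OpenStack client commands for Glance and for Magnum, the latter provided by python-magnumclient. The sketch below strings them together. The image file, Kubernetes version, flavor m1.medium, network name public, and the template and cluster names are all placeholders. The os_distro=ubuntu image property and the kube_tag label follow the conventions described in the magnum-cluster-api documentation as understood here, so check them against the driver documentation for your release. The calico network driver matches the kubernetes_allowed_network_drivers override above. maybe_run and create_workload_cluster are hypothetical helpers. Setting DRY_RUN=1 prints the commands instead of running them.

```shell
#!/bin/sh
# Hypothetical sketch: upload an image, create a cluster template and create a
# workload cluster. Names, flavors and versions are placeholders.

# Print the command when DRY_RUN=1, otherwise execute it.
maybe_run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

create_workload_cluster() {
  image_file="$1"    # a prebuilt k8s image, e.g. ubuntu-2204-kube-v1.33.5.qcow2
  k8s_version="$2"   # must match the Kubernetes version baked into the image
  image_name="$(basename "$image_file" .qcow2)"
  template="k8s-${k8s_version}"

  # 1. Upload the image; Magnum relies on the os_distro property
  maybe_run openstack image create "$image_name" \
    --disk-format qcow2 --container-format bare \
    --property os_distro=ubuntu --file "$image_file" || return 1

  # 2. Create a cluster template pointing at that image
  maybe_run openstack coe cluster template create "$template" \
    --coe kubernetes --image "$image_name" \
    --external-network public --master-lb-enabled \
    --flavor m1.medium --master-flavor m1.medium \
    --network-driver calico --labels "kube_tag=${k8s_version}" || return 1

  # 3. Create the workload cluster from the template
  maybe_run openstack coe cluster create k8s-cluster \
    --cluster-template "$template" --master-count 1 --node-count 2
}

# Example:
# DRY_RUN=1 create_workload_cluster ./ubuntu-2204-kube-v1.33.5.qcow2 v1.33.5
```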

Optional Components

Use of magnum-cluster-api-proxy

The control plane k8s cluster needs to access the k8s control plane of each tenant cluster in order to configure it. Out of the box, the only way to do this is through the public network (a floating IP), which means that the tenant cluster's k8s API must be globally reachable. This poses a security threat to such tenant clusters.

To solve this issue and give the control plane k8s cluster access to tenant clusters inside their internal networks, a proxy service is introduced.

The proxy service must be spawned on the hosts where the Neutron metadata agents are spawned. For LXB/OVS these are members of neutron-agent_hosts, while for OVN the service should be installed on all compute_hosts (or neutron_ovn_controller).

The service configures its own HAProxy instance and creates backends for managed k8s clusters that point inside the corresponding network namespaces. The service does not spawn its own namespaces; instead it uses the existing metadata namespaces to reach the load balancer inside the tenant network.

Configuration of the service is relatively trivial:

```yaml
# Define a group of hosts where to install the service.
# OVN: compute_hosts / neutron_ovn_controller
# OVS/LXB: neutron_metadata_agent
mcapi_vexxhost_proxy_hosts: compute_hosts

# Define the address and port for the HAProxy instance to listen on
mcapi_vexxhost_proxy_environment:
  PROXY_BIND: "{{ management_address }}"
  PROXY_PORT: 44355
```

When deploying the proxy service, also ensure that the variable magnum_magnum_cluster_api_git_install_branch is defined for the mcapi_vexxhost_proxy_hosts as well. Alternatively, align the value of magnum_magnum_cluster_api_git_install_branch with mcapi_vexxhost_proxy_install_branch. Either approach avoids conflicts caused by different versions of the driver being used.
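When the two variables are set in different files, a quick comparison before running the proxy playbook can catch a mismatch. The sketch below reads simple top-level key: value lines with awk. It is not a YAML parser. yaml_scalar and check_mcapi_versions are hypothetical helpers, and the file holding mcapi_vexxhost_proxy_install_branch depends on where you set it.

```shell
#!/bin/sh
# Hypothetical helpers: compare the driver version used by magnum with the one
# used by the proxy service.

# Print the value of a top-level "key: value" line in file $1, without quotes.
# Only handles simple scalars; this is not a YAML parser.
yaml_scalar() {
  awk -v key="$2" 'index($0, key ":") == 1 {
    sub(/^[^:]*:[ \t]*/, "")
    sub(/[ \t]+$/, "")
    print
    exit
  }' "$1" | tr -d "\"'"
}

check_mcapi_versions() {
  magnum_branch="$(yaml_scalar "$1" magnum_magnum_cluster_api_git_install_branch)"
  proxy_branch="$(yaml_scalar "$2" mcapi_vexxhost_proxy_install_branch)"
  if [ -n "$magnum_branch" ] && [ "$magnum_branch" = "$proxy_branch" ]; then
    echo aligned
  else
    echo "mismatch: magnum=${magnum_branch:-unset} proxy=${proxy_branch:-unset}"
  fi
}

# Example (the second path is a placeholder for wherever the proxy branch is set):
# check_mcapi_versions /etc/openstack_deploy/group_vars/magnum_all/main.yml \
#   /etc/openstack_deploy/user_variables.yml
```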

Once configuration is complete, you can run the playbook:

```shell
openstack-ansible osa_ops.mcapi_vexxhost.mcapi_proxy
```
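After the playbook completes, each proxy host should have its HAProxy instance listening on PROXY_PORT, which is 44355 in the example configuration. Below is a minimal check using ss from iproute2, assumed to be installed on the hosts. proxy_listening is a hypothetical helper.

```shell
#!/bin/sh
# Hypothetical helper: report whether anything is listening on a TCP port.
proxy_listening() {
  port="${1:-44355}"
  # ss prints a header line; any line after it is a matching listening socket
  if ss -ltn "sport = :${port}" 2>/dev/null | tail -n +2 | grep -q .; then
    echo listening
  else
    echo not-listening
  fi
}

# Example, on a host in mcapi_vexxhost_proxy_hosts:
# proxy_listening 44355
```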

Deploy the workload clusters with a local registry

TODO - describe how to do this

Deploy the control plane cluster from a local registry

TODO - describe how to do this

Troubleshooting

Local testing

An OpenStack-Ansible all-in-one configured with Magnum and Octavia is capable of running a functioning magnum-cluster-api deployment.

Sufficient memory should be available beyond the minimum 8G usually required for an all-in-one. A multinode workload cluster may require nova to boot several Ubuntu images in addition to an Octavia loadbalancer instance. 64G would be an appropriate amount of system RAM.

There must also be sufficient disk space in /var/lib/nova/instances to support the required number of instances. The normal minimum of 60G required for an all-in-one deployment will be insufficient; 500G would be plenty.
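Before building a test environment, a short script can check a host against the figures above. This sketch assumes a Linux host with /proc/meminfo and a POSIX df. check_aio_resources is a hypothetical helper whose defaults are the 64G of RAM and 500G of disk suggested above. It reports total RAM rather than free memory. If /var/lib/nova/instances does not exist yet, it checks the root filesystem instead.

```shell
#!/bin/sh
# Hypothetical helper: compare total RAM and free disk space against the
# figures suggested for a magnum-cluster-api all-in-one.
check_aio_resources() {
  min_ram_gb="${1:-64}"
  min_disk_gb="${2:-500}"
  instances_dir="${3:-/var/lib/nova/instances}"

  # MemTotal in /proc/meminfo is in kB; convert to whole GiB
  ram_gb=$(awk '/^MemTotal:/ { print int($2 / 1024 / 1024) }' /proc/meminfo)

  # Check the filesystem holding the instances directory, or / if it
  # does not exist yet (for example before nova is deployed)
  [ -d "$instances_dir" ] || instances_dir=/
  # df -P prints one line per filesystem; column 4 is available 1K blocks
  disk_gb=$(df -Pk "$instances_dir" | awk 'NR == 2 { print int($4 / 1024 / 1024) }')

  result=ok
  if [ "$ram_gb" -lt "$min_ram_gb" ]; then
    echo "RAM: ${ram_gb}G total, ${min_ram_gb}G suggested"
    result=low
  fi
  if [ "$disk_gb" -lt "$min_disk_gb" ]; then
    echo "Disk: ${disk_gb}G free under ${instances_dir}, ${min_disk_gb}G suggested"
    result=low
  fi
  echo "$result"
}

# Example: check_aio_resources   (uses the 64G / 500G defaults)
```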